When it comes to scraping job data from the web, you could use off-the-shelf tools that require no coding. However, if you are scraping multiple websites, be they job boards or company career pages, and you keep adding new data sources regularly, you will run into constraints, since these tools allow only a limited degree of customization. If you are intent on building your own solution, the better path is to write the scraper yourself in one of the top programming languages.

The Benefits Of Using Top Programming Languages Are:

- You can make use of third-party libraries that make parsing web pages and extracting data easier. These libraries also have good developer support through websites like StackOverflow, where developers ask and answer questions and discuss the best way to solve a particular problem.

- You can make your requests look as if they come from a browser, for example by sending browser-like request headers. Combined with a time gap between requests to pages of the same site, this helps you avoid getting blocked or having your IP blacklisted.

- Since websites keep changing their user interfaces, you will need to update your code from time to time. This can be a little difficult, but it is never impossible: you can always try another library or a new way of scraping the data, so there are no hard constraints.

- You can always decide to change your approach, move your web scraping engine to the cloud, or make other changes, since no agreement with a third-party vendor restricts you.
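The browser-like headers and the time gap between requests mentioned in the list above can be sketched with Python's standard library. This is a minimal illustration, not a complete anti-blocking strategy: the `BROWSER_USER_AGENT` string, the `DELAY_SECONDS` value, and the helper names `build_request`, `Throttle`, and `fetch_all` are all assumptions made for the example.

```python
import time
import urllib.request

# Hypothetical values: pick a User-Agent and delay that suit the site you scrape.
BROWSER_USER_AGENT = "Mozilla/5.0 (X11; Linux x86_64) Gecko/20100101 Firefox/120.0"
DELAY_SECONDS = 2.0


def build_request(url, user_agent=BROWSER_USER_AGENT):
    """Return a Request that carries a browser-like User-Agent header."""
    return urllib.request.Request(url, headers={"User-Agent": user_agent})


class Throttle:
    """Enforce a minimum gap between consecutive requests."""

    def __init__(self, delay, clock=time.monotonic, sleep=time.sleep):
        self.delay = delay
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.delay - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


def fetch_all(urls, delay=DELAY_SECONDS):
    """Fetch each URL in turn, pausing between requests to the same site."""
    throttle = Throttle(delay)
    pages = []
    for url in urls:
        throttle.wait()
        with urllib.request.urlopen(build_request(url), timeout=30) as response:
            pages.append(response.read())
    return pages
```

The clock and sleep functions are injectable only so that the throttle can be exercised without real waiting. Whether a given site permits scraping at all is a separate question that this sketch does not address.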

Some Of The Top Programming Languages Are:

Python

Python is the most popular language for scraping data from the web. It is one of the easiest languages to master, with a gentle learning curve, and its statements and commands read much like English. Someone with some coding experience can pick up the basics in about a week. There are also multiple third-party libraries that are used to scrape data from different types of websites.

One of the most commonly used libraries in Python is BeautifulSoup. This is the library that we have used ourselves in a lot of the DIY articles shared on our blogs. It is easy to understand and use. It makes parsing any HTML or XML page much easier, since you can extract the data inside specific HTML elements once you have located them manually. Once you have identified all the HTML elements that hold the data you need, you can repeat the extraction procedure over multiple pages. The code itself is very simple, and what you learn from parsing one web page carries over to parsing others. Another popular third-party library used in Python is Scrapy.
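The idea of extracting the data inside specific HTML elements can be sketched as follows. To keep the example free of dependencies, it swaps in Python's built-in `html.parser` instead of BeautifulSoup; with BeautifulSoup the same lookup is typically a single `soup.find_all("h2", class_="job-title")` call. The `SAMPLE_PAGE` markup, the class names, and the helper names are made up for illustration.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collect the text inside every <tag> element that has a given CSS class."""

    def __init__(self, tag, css_class):
        super().__init__()
        self.tag = tag
        self.css_class = css_class
        self.results = []
        self._depth = 0    # greater than 0 while inside a matching element
        self._buffer = []  # text fragments of the current match

    def handle_starttag(self, tag, attrs):
        if self._depth:
            if tag == self.tag:
                self._depth += 1  # same tag nested inside a match
            return
        classes = (dict(attrs).get("class") or "").split()
        if tag == self.tag and self.css_class in classes:
            self._depth = 1
            self._buffer = []

    def handle_endtag(self, tag):
        if self._depth and tag == self.tag:
            self._depth -= 1
            if self._depth == 0:
                self.results.append("".join(self._buffer).strip())

    def handle_data(self, data):
        if self._depth:
            self._buffer.append(data)


def extract_text(html, tag, css_class):
    """Return the text of every matching element in the page, in document order."""
    parser = ClassTextExtractor(tag, css_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Hypothetical job-board markup used only for this example.
SAMPLE_PAGE = """
<ul>
  <li><h2 class="job-title">Data Engineer</h2><span class="location">Pune</span></li>
  <li><h2 class="job-title">Backend <b>Developer</b></h2><span class="location">Berlin</span></li>
</ul>
"""
```

Calling `extract_text(SAMPLE_PAGE, "h2", "job-title")` returns the two job titles, and the same call can be repeated for every page that shares this layout.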

With Scrapy, you can create automated bots and deploy them on your own cloud servers. While it is slightly more complicated than BeautifulSoup, it comes with many more features, such as crawl depth restriction and cookie and session handling. Handling complicated job boards, where job data is stored on web pages separated by, say, region or sector, can be easier when using Scrapy.
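Crawl depth restriction is easier to picture with a small example. The sketch below is not Scrapy code (Scrapy exposes this behaviour through its `DEPTH_LIMIT` setting); it is a plain-Python breadth-first crawl that stops following links beyond a chosen depth. The `FAKE_SITE` link map and the `fake_get_links` helper are hypothetical stand-ins for real page fetching.

```python
from collections import deque


def crawl(start_url, get_links, max_depth):
    """Breadth-first crawl that never follows links more than max_depth hops
    away from start_url.

    get_links is a caller-supplied function that returns the URLs a page links to.
    """
    visited = {start_url}
    order = []
    queue = deque([(start_url, 0)])
    while queue:
        url, depth = queue.popleft()
        order.append(url)
        if depth >= max_depth:
            continue  # depth limit reached: keep the page, ignore its links
        for link in get_links(url):
            if link not in visited:
                visited.add(link)
                queue.append((link, depth + 1))
    return order


# Hypothetical job board organised by region, then by individual listing.
FAKE_SITE = {
    "/jobs": ["/jobs/europe", "/jobs/asia"],
    "/jobs/europe": ["/jobs/europe/1", "/jobs/europe/2"],
    "/jobs/asia": ["/jobs/asia/1"],
    "/jobs/europe/1": ["/jobs"],
}


def fake_get_links(url):
    """Stand-in for fetching a page and extracting its links."""
    return FAKE_SITE.get(url, [])
```

With `max_depth=1` the crawl visits only the landing page and the region pages; raising it to 2 also reaches the individual listings.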

Golang

Golang may not be the first option that comes to mind for web scraping, since it is a comparatively recent language with a steep learning curve. However, it makes up for this with its speed during concurrent scraping. I have used one of its third-party libraries, Colly, and scraping thousands of web pages by crawling a particular website took only a few minutes. Go is a compiled language and is strongly, statically typed, which is why Go code generally runs much faster than code in languages like Python. Thanks to goroutines, you can run multiple lightweight threads that scrape data from web pages in parallel. Go also benefits from the fact that you can write single-file scripts that access web pages and scrape data without needing a framework.
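The fan-out pattern described here, where many pages are scraped in parallel, is not specific to Go. To stay in one language across the examples in this article, the sketch below is a rough Python analogue using a thread pool; it is not Go or Colly code and makes no claim about matching Go's speed. The `scrape_in_parallel` helper and the `fake_scrape` stand-in are assumptions for illustration.

```python
from concurrent.futures import ThreadPoolExecutor


def scrape_in_parallel(urls, scrape_one, max_workers=8):
    """Run scrape_one(url) for every URL on a pool of worker threads.

    Results are returned in the same order as the input URLs.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(scrape_one, urls))


def fake_scrape(url):
    """Stand-in for fetching and parsing a page; returns a small record."""
    return {"url": url, "path_length": len(url)}
```

Threads help here mainly because scraping spends most of its time waiting on the network. In Go, each call would usually run in its own goroutine instead.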

Node.js

Node.js is particularly well suited to crawling web pages that render their UI dynamically with JavaScript. Since every Node.js process takes up a core on the CPU, you need to make sure that you are using a multi-core CPU when running multiple Node.js processes in parallel. Node.js is not recommended for complex processes that scrape data from multiple web pages constantly; it is mostly used for shorter, one-time scraping jobs, for example when you need to scrape a particular job board once and get all the data. It is also supported by multiple third-party libraries such as Cheerio, which helps traverse web pages and extract the data.

Ruby

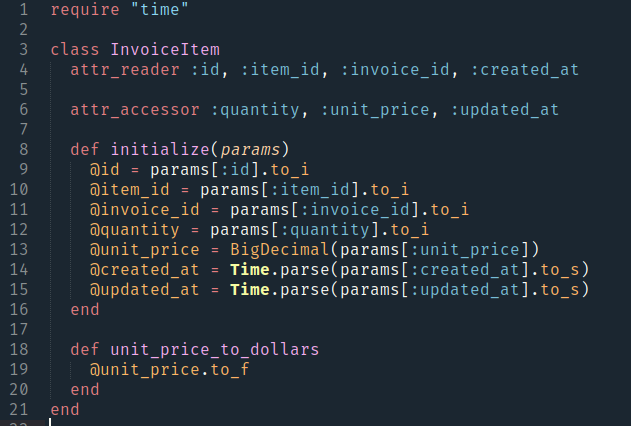

While Ruby itself might take some time to master, Ruby on Rails is relatively easy to pick up and can be used to create web scraping applications quickly. Rails provides features like scaffolding that help with quicker development and turnaround times. Ruby gems like HTTParty and Nokogiri can help with your web scraping efforts: the first makes HTTP calls and fetches the HTML, while the second parses HTML, XML, and other pages and comes with CSS selector support.

The language you use depends mainly on your level of comfort and your years of experience with it, as well as on which languages and libraries your web scraping team has used before. However, some languages have more third-party libraries and better developer support, and this needs to be taken into account before you begin your project. Also, if you are thinking of deploying your code in the cloud, as a service on an AWS EC2 instance or as a serverless Lambda function, you need to make sure that your language is supported by the cloud service you are using. Settling these things early on will make it much easier to use your web scraping solution, add new websites as and when required, and maintain it.

These are the top programming languages that we use daily to scrape job data from different websites. Choose JobsPikr for your job data requirements across different websites.