Job Boards and job agencies are helping our generation tackle one of the greatest problems in today’s world: unemployment. The difficulty for fresh graduates, and even for those seeking new jobs, isn’t always a lack of jobs but the difficulty of finding the right one. Job Parsers for Job Boards in particular have proved helpful by aggregating jobs from thousands of job sites and company career pages. But a Job Board’s ability to help candidates depends on how well presented and up to date the data on its website is.

Since most Job Boards use an internally handled or an externally subscribed Job Parser, data quality is often questionable. The problem with Job Parsers is that they lack built-in intelligence. They can gather job listings from as many websites as you add to their system, but when pulling the data points for each job listing, they cannot ensure that the data is correct. They may not interpret data points from different websites in the same way, which can lead to unclean or non-uniform data on your website and, in turn, inconvenience candidates.

How Reliable is a Job Parser?

Job Parsers collect job data from different websites but cannot apply any intelligence to interpret the data they extract. For example, suppose one website lists the required experience in months while other listing websites list it in years. A Job Parser would not convert the figure given in months into years, so the values would not be directly comparable.
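To make the months-versus-years example concrete, here is a minimal sketch of the kind of normalization a plain Job Parser skips. The function name `experience_in_years` and the regular expression are assumptions made for illustration; real listings phrase experience in many more ways than this pattern covers.

```python
import re
from typing import Optional

# Hypothetical pattern: a number, an optional "+", then a months/years unit.
_EXPERIENCE_RE = re.compile(
    r"(\d+(?:\.\d+)?)\s*\+?\s*(months?|mos?|years?|yrs?)\b",
    re.IGNORECASE,
)


def experience_in_years(raw: str) -> Optional[float]:
    """Return the required experience in years, or None if it cannot be parsed."""
    match = _EXPERIENCE_RE.search(raw)
    if match is None:
        return None  # leave unparseable values for manual review
    value = float(match.group(1))
    unit = match.group(2).lower()
    if unit.startswith("mo"):
        # Months are converted so every listing uses the same unit.
        return round(value / 12, 2)
    return value
```

With this in place, a listing that says "18 months" and one that says "1.5 years" would end up with the same value, and anything the pattern does not recognize is flagged rather than guessed.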

Let’s take another example. Suppose there are two job listings: one for a Python Developer and another for a Backend Developer where the must-have skill is Python. Both are essentially listings for a software developer who codes in Python. But a Job Parser would not be able to add a shared tag such as “python” to both listings, so someone who searches for the term “python developer” would probably not see these listings together.
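A rough sketch of the tagging step that is missing in this example could look like the following. The `SKILL_KEYWORDS` vocabulary and the `tag_listing` function are hypothetical, and simple substring matching is used only to keep the example short; a production system would need a much richer vocabulary and smarter matching.

```python
from typing import Dict, List, Set

# Hypothetical vocabulary: canonical tag -> keywords that imply the tag.
SKILL_KEYWORDS: Dict[str, List[str]] = {
    "python": ["python"],
    "backend": ["backend", "back-end"],
}


def tag_listing(title: str, description: str) -> Set[str]:
    """Return every tag whose keywords appear in the title or description."""
    text = f"{title} {description}".lower()
    return {
        tag
        for tag, keywords in SKILL_KEYWORDS.items()
        if any(keyword in text for keyword in keywords)
    }
```

Under these assumptions, both the Python Developer listing and the Backend Developer listing that mentions Python in its requirements would carry the "python" tag, so a search on that tag could return them together.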

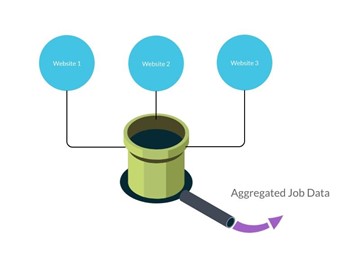

Using a Job Parser is a highly mechanical way of gathering job data for your website. As the image above shows, it is an unintelligent box that scrapes job data from multiple websites, bunches it all together, and provides you with a single feed. Its limitation is not just that it cannot interpret the data it parses, but also that it cannot validate that data.

For example, if your parser encounters job listings from a company that doesn’t exist, it cannot use other data sources to check whether the company is genuine. Job Parsers can also pass along job listings that are stale or even expired, since they lack the capability to weed out such listings.
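As an illustration of what weeding out stale listings might involve, the sketch below flags a listing when its application deadline has passed or when it is older than a cut-off. The `is_stale` function and the 30-day `MAX_AGE_DAYS` threshold are assumptions chosen for the example, not values any particular parser uses.

```python
from datetime import date, timedelta
from typing import Optional

MAX_AGE_DAYS = 30  # hypothetical cut-off; tune it to your own job board


def is_stale(posted_on: date, deadline: Optional[date], today: date) -> bool:
    """Flag listings whose deadline has passed or that are older than the cut-off."""
    if deadline is not None and deadline < today:
        return True  # the application window has already closed
    return (today - posted_on) > timedelta(days=MAX_AGE_DAYS)
```

Passing `today` in explicitly, rather than calling `date.today()` inside the function, keeps the check easy to test against fixed dates.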

Upgrading or Updating your Job Parser?

If you are facing multiple bugs in your Job Parser and the percentage of unclean data is rising, you may decide to update your parser or make some configuration changes. You may even upgrade your parser by moving to better software or by changing your in-house tool. These updates or upgrades will help you in the short term, but when you add a new website for scraping job data, or when one of the existing websites changes its UI, you will again have to take on the task of updating the parser.

Since the parser has no built-in intelligence, errors and issues will only be spotted once the data flows into your website, and you can encounter two major problems. First, you might have to make frequent changes. Second, the amount of bad data on your website will be limited only by how fast you can update the parser.

Due to these issues, a one-time fix is not a solution and will not enable you to provide a better experience to your customers. Upgrading the user interface or adding new filters may require only a one-time effort. But unless you keep updating the Job Parser itself, dirty data will render those other features useless. For instance, if your job listings do not have the correct tags, a well-thought-out filtering system with multiple options will be unusable.

Also, if you are maintaining the parsing software on your own infrastructure, you will need to maintain and scale that infrastructure based on the amount of data that you need to collect in a given time. As your data requirements grow, your infrastructure effort and costs will also increase.

How can a DaaS Solution Help?

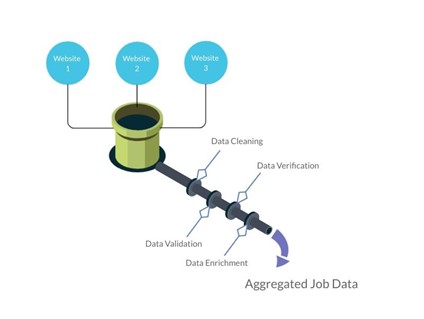

Based on what we have seen so far, a Job Parser won’t make the mark if you want to create a Job Board that stands out amongst the competition. Anyone can create a web scraper and generate aggregated job data from tens of websites. Developers can even write their own DIY code to build such a system, given enough time. However, the process that we saw earlier needs a few more steps to create a truly end-to-end job feed that companies can use confidently to provide job seekers with the best experience. This is possible because the data produced at the end of the DaaS pipeline illustrated in this section is sorted, tagged, cleaned, validated, and verified. The result can be vastly different from that of the plain parser pipeline we saw earlier.

- Data Cleaning: A job scraping solution that provides you with a continuous job feed would automatically clean the data and check for imperfections. Job postings with erroneous data, such as a submission deadline that lies in the past, may not be forwarded to you. Basic spelling checks and data quality checks are also made.

- Data Validation: Data validation is very important to make sure candidates are not confused by mistakes in the job listing content. This can include different types of checks. If a data point should be numeric, like age or salary, then checks for that are included in the data flow. If you only want job posts that include the salary, that can be used as a pre-filter for your job feed. You may also want to make sure that every job post contains certain specific data points, and that can be validated too.

- Data Verification: This step is not compulsory but can be used as an add-on to provide even better results to job seekers. Certain checks, like validating the companies mentioned in the job posts or using Glassdoor data to recheck certain data points, can be done to filter out any post that doesn’t fit the bill. Such posts can be rejected entirely or routed to a separate flow that requires manual verification.

- Data Enrichment: Not every website will provide you with the same key-value pairs, and not all of them will list the information in the same way. One site can have a salary in dollars, while another can mention it in euros. A single key can also have different names on different websites: “Required years of experience” on one site can be “Years of experience required” on another.

Normalizing the data and collating data points from different websites that mean the same thing can provide a more uniform job feed to your candidates, and as they browse through multiple job listings, they can compare them more easily. Filters are also likely to work better when you enrich the job feed.
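The validation and enrichment steps described above can be sketched in a few lines. This is an illustration under stated assumptions, not how any particular DaaS pipeline is implemented: the `KEY_ALIASES` table, the `REQUIRED_FIELDS` list, and the functions `normalize_keys` and `validate` are all hypothetical names.

```python
from typing import Any, Dict, List

# Hypothetical alias table: field name as seen on a source site -> canonical name.
KEY_ALIASES: Dict[str, str] = {
    "required years of experience": "experience_years",
    "years of experience required": "experience_years",
    "salary": "salary",
    "title": "title",
}

# Hypothetical pre-filter: fields every forwarded listing must contain.
REQUIRED_FIELDS: List[str] = ["title", "salary"]


def normalize_keys(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Map site-specific field names onto one canonical schema."""
    normalized: Dict[str, Any] = {}
    for key, value in raw.items():
        canonical = KEY_ALIASES.get(key.strip().lower())
        if canonical is not None:  # unknown keys are dropped in this sketch
            normalized[canonical] = value
    return normalized


def validate(listing: Dict[str, Any]) -> List[str]:
    """Return a list of problems; an empty list means the listing passes."""
    errors: List[str] = []
    for field in REQUIRED_FIELDS:
        if listing.get(field) in (None, ""):
            errors.append(f"missing {field}")
    salary = listing.get("salary")
    if salary not in (None, "") and not isinstance(salary, (int, float)):
        errors.append("salary is not numeric")
    return errors
```

Listings that come back with a non-empty error list could be rejected or sent to a manual review flow, in line with the verification step described above. Currency conversion is left out on purpose, since it depends on exchange rates that would have to come from an external source.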

To Sum Up

Job Boards that want to take a leap should move on from Job Parsers to Integrated Job Discovery Solutions. One of the leading data providers in the job industry (as mentioned in a report by Forrester) is our job scraping service, JobsPikr. We have been providing clean job feeds to multiple clients, who use the data for everything from Job Boards to market research. With an end-to-end solution like ours, you can focus on your core business of maintaining a Job Board and providing additional services to companies and candidates, while we provide you with a continuous job feed in the format and storage medium of your choice (you could also opt for API integration). Having the best quality data is the key to any successful digital business, and Job Boards can revolutionize the industry by transitioning from Job Parsers to automated job feed solutions.