**TL;DR**

Most AI models fail not because the algorithms are weak but because the training data is messy. This case study walks through how one enterprise workforce analytics team used JobsPikr’s clean, AI-ready job datasets to improve AI accuracy across skill extraction, salary prediction, and workforce trend models. By removing duplicates, fixing inconsistent job titles, stabilizing locations, and feeding the model fresh market signals, the team made its predictions more reliable, more consistent, and far easier to maintain.

Why AI Accuracy Falls Without Clean, Structured Job Data

Anyone who has worked with job data knows the truth: nothing derails an AI project faster than inconsistent inputs. Even the smartest model becomes unreliable when the dataset is full of outdated postings, broken titles, messy salaries, or missing skills. You can tune the model all you want, but if the inputs are flawed, the outputs won’t magically get better.

AI accuracy depends on one thing more than anything else: clean data. And in the context of job intelligence, the stakes are even higher. Titles evolve every quarter, companies label the same roles differently, and skills shift before most teams have even updated their taxonomies. Without a strong data foundation, your AI starts making strange jumps, predicting irrelevant skills, and misclassifying roles. If you’ve ever seen a model confuse a Product Analyst with a Product Owner, or group nursing roles with administrative positions, you’ve already seen what unstructured job datasets can do.

This is the problem the client in this case study was facing. Their internal data was reasonably clean, but it wasn’t enough. They needed external job datasets with structure, freshness, and depth. More importantly, they needed data they didn’t have to fix every week. That’s where JobsPikr stepped in.

The hidden cost of noisy job data

Messy job data doesn’t just lower accuracy. It inflates the time your team spends debugging. It forces analysts to manually correct titles. It creates inconsistent salary predictions, because the model has no sense of regional benchmarks. It even raises the cost of experimentation because you lose trust in how a model behaves when new data arrives.

Industry research backs this up. IBM has estimated that poor-quality data costs the US economy roughly $3.1 trillion per year, mainly due to bad decisions, manual fixes, and rework. While that stat covers all sectors, workforce analytics teams feel the pain more sharply because their systems rely on external market signals that change quickly.

This is why improving AI accuracy requires more than clever algorithms. It requires job datasets that are stable, structured, and continuously refreshed.

Why HR and talent intelligence teams feel the pain first

For HR analytics leaders, model errors don’t stay hidden inside a dashboard. They show up directly in business decisions. Compensation teams rely on accurate salary clustering. Talent intelligence teams rely on clean job taxonomies for competitive comparison. Forecasting teams need clear hiring trend signals to justify staffing plans.

When the underlying job data is flawed, every downstream model becomes fragile. And when an organization tries to scale AI across these functions, the problem only multiplies.

This is what pushed the client in our story to rethink their entire data foundation. Once they saw the type of stability that JobsPikr’s cleaned and normalized job datasets could provide, everything else started to fall into place.

Download the AI Readiness Index Report

Client Background: Who the Client Is and Why They Needed AI-Ready Job Datasets

The client is a large workforce analytics team inside a global enterprise. Their job is to help business leaders understand hiring patterns, skill trends, and compensation movements across several markets. They already had good internal HR data, but what they didn’t have was reliable external job-market context.

Like many analytics teams, they were building multiple AI models at once: skill extraction, role similarity, job clustering, and compensation prediction. These systems depend heavily on high-quality job datasets because job posting language changes quickly and varies dramatically between companies. The team had tried collecting job data on their own, but the manual cleaning was slowing down every experiment. They needed a source of AI-ready datasets that didn’t require weekly maintenance.

They also needed job data that was comprehensive enough to capture the full picture. Internal HR systems can show you your own history, but they can’t tell you how fast your competitors are hiring, what new roles are emerging, or which skills are suddenly appearing across your market. Their goal wasn’t just to build a model that worked in isolation. They wanted a model that could mirror real-world behavior.

This made the choice clear. They needed clean, structured job datasets built specifically for AI training. And they needed it from a partner that could handle both depth and reliability.

Their AI use case inside workforce analytics

The team was building a range of AI systems that depended on consistent inputs. Each one of these use cases suffered when the data was messy, incomplete, or outdated.

Skill Extraction Models: Their skill extraction pipeline was built using NLP and embedding-based models. But because job postings often contain noise, duplicated lines, inconsistent formatting, and irrelevant filler text, the model kept pulling skills that didn’t belong. It struggled with variations of the same skill or newly emerging hybrid skills that weren’t documented internally.

Role Similarity and Job Clustering Models: They were also building a role similarity engine to map job titles into standardized clusters. This required a diverse and rich dataset. When titles appeared in formats like “AI Engineer,” “AI Engg,” “Senior AI Specialist,” or even “AI Lead (Contract),” the model struggled to generalize because the training data lacked consistency.

Salary Benchmarking Models: Salary predictions were supposed to guide workforce planning and compensation design. However, missing or messy salary fields, inconsistent currency formats, and unreliable location data made the model unstable.

Trend Forecasting Models: Their demand forecasting engine needed fresh posting data to identify which roles were heating up or cooling down across markets. But the client’s data was outdated—some postings were months old, missing updated fields, or duplicated because different teams pulled data separately.

These challenges made one thing clear: if they wanted to improve AI accuracy, the starting point had to be the data. Algorithms couldn’t fix foundational issues. A better data pipeline could.

Want AI-Ready Job Datasets That Improve Model Accuracy?

See how JobsPikr can support your AI roadmap. Book a demo

The Problem: What Was Blocking the Client From Improving AI Accuracy

Before they started working with JobsPikr, the client had already invested in model development, cloud infrastructure, and internal data engineering. Their issue wasn’t a lack of technical skill or ambition. The real blocker was the dataset itself. Every time they trained or retrained a model, the results fluctuated wildly. Accuracy jumped up one week and dropped the next. They couldn’t replicate improvements consistently, and debugging took more time than experimentation.

Their biggest problem was simple: the job data they were using wasn’t clean enough, structured enough, or fresh enough to support high-quality AI training. Even small gaps in the inputs caused large deviations in predictions. Their AI team described it as “trying to build a city on sand.”

Training data problems are slowing model improvements

Most of the errors they were fighting traced back to the same root cause: inconsistent job posting data. Some of the issues were minor, but when they piled up, the combined effect was significant.

Irregular job titles: Titles came in dozens of formats. A single role could appear as “Data Eng,” “Data Engineer,” “Data Engineering (Remote),” and “Engineer – Data.” The model tried to treat each version as a unique role, which hurt clustering quality.

Missing or unstructured salary fields: Their salary prediction model needed clean compensation fields, but many postings had missing salary bands, currency mismatches, or the salary hidden inside unstructured text. This forced the team to either drop the data or manually clean it, both of which slowed progress.

Inconsistent location formats: The dataset contained variations like “NYC,” “New York,” “New York City,” “New York, NY,” and even “Manhattan – Hybrid.” When the model tried to map roles geographically, these inconsistencies reduced accuracy and created misleading clusters.

Duplicates and outdated postings: Because internal teams sourced job data from multiple vendors and manual pulls, duplicates were common. Outdated postings—sometimes months old—were still being included in training sets. This skewed recency-based models and created false signals.

Overly noisy text fields: Job descriptions were full of formatting issues, repeated lines, tracking pixels, messy HTML, and irrelevant content. The model kept extracting skills it shouldn’t have, simply because the text wasn’t pre-cleaned.

Individually, these issues look small. Together, they create unstable models. And the client had reached a point where each step of progress required two steps of fixing.
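A lookup-based normalizer is one simple way to collapse title and location variants like the ones listed above into a single canonical value. The sketch below is illustrative only: the alias tables, the `MODIFIERS` set, and the function names are assumptions made for this example, not JobsPikr’s actual mapping, which would need far broader coverage.

```python
import re

# Hypothetical alias tables; a production mapping would be far larger.
TITLE_ALIASES = {
    "data eng": "Data Engineer",
    "data engineer": "Data Engineer",
    "data engineering": "Data Engineer",
    "engineer data": "Data Engineer",
}
LOCATION_ALIASES = {
    "nyc": "New York, NY",
    "new york": "New York, NY",
    "new york city": "New York, NY",
    "new york ny": "New York, NY",
    "manhattan": "New York, NY",
}
# Words that describe the work arrangement, not the role or the place.
MODIFIERS = {"remote", "hybrid", "onsite", "contract"}


def _key(raw: str) -> str:
    """Lowercase, drop parenthesised notes and punctuation, remove modifiers."""
    text = re.sub(r"\(.*?\)", " ", raw.lower())
    words = re.findall(r"[a-z]+", text)
    return " ".join(w for w in words if w not in MODIFIERS)


def normalize_title(raw: str) -> str:
    # Unknown titles pass through unchanged so they can be reviewed later.
    return TITLE_ALIASES.get(_key(raw), raw.strip())


def normalize_location(raw: str) -> str:
    return LOCATION_ALIASES.get(_key(raw), raw.strip())


if __name__ == "__main__":
    for title in ["Data Eng", "Data Engineering (Remote)", "Engineer - Data"]:
        print(title, "->", normalize_title(title))
    for place in ["NYC", "New York, NY", "Manhattan - Hybrid"]:
        print(place, "->", normalize_location(place))
```

Returning unknown values unchanged, rather than guessing, keeps unmapped titles visible so the alias table can be extended deliberately.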

Internal data alone wasn’t enough

On top of the structural issues, the client was hitting the limits of internal HR data. Internal systems can tell you which roles you hired for. They cannot tell you:

- Which new titles are emerging in the broader market

- Which skills competitors are asking for

- How salary ranges are shifting across regions

- Which roles are rising or falling in demand

- What hybrid skill combinations are becoming the norm

This missing context created blind spots in every model. The client’s talent intelligence leaders knew that internal data was only one half of the story. To improve AI accuracy, they needed external market signals that were clean, stable, and delivered in a consistent format.

This is what led them to JobsPikr. They didn’t need “more data.” They needed trusted data, the type that lets models behave predictably and helps analysts make confident decisions.

The JobsPikr Approach: How JobsPikr Delivered Clean Job Datasets for Better Model Performance

The client didn’t need another firehose of raw job postings. They needed data that arrived already cleaned, standardized, enriched, and aligned to a schema that AI systems could trust. That’s exactly what JobsPikr delivered.

From day one, the goal wasn’t just “more data.” It was “better data.” The JobsPikr team mapped the client’s AI pipelines, identified where the models were breaking, and built a delivery method that addressed the root cause: data inconsistency. With a stable foundation in place, the client’s models had the structure they needed to improve AI accuracy without constant rework.

Clean data pipeline built for AI-ready datasets

JobsPikr’s pipeline isn’t a simple collection engine. It’s a full data-quality lifecycle that starts with large-scale ingestion and ends with deeply usable job datasets.

Here’s what the client gained from the pipeline:

Title normalization that creates consistency rather than chaos:

JobsPikr maps thousands of title variations into clean, industry-standard equivalents. This immediately improved the client’s clustering model because similar roles finally looked similar. Instead of treating “Data Scientist II,” “Senior Data Scientist,” and “Lead Data Science Specialist” as unrelated roles, the model understood their hierarchy and structure.

Deduplication that removes noisy variants:

With strict fuzzy-matching rules, JobsPikr removes duplicates created by reposting, job board syndication, or slight text changes. This helped the client stop wasting compute on near-identical postings.

Location standardization that stabilizes geographic models:

Every job posting is mapped to structured fields like city, state, country, and standardized metro areas. When the model used this version of the data, the forecasting engine immediately became more consistent.

Skill extraction through pre-processing:

JobsPikr enriches the dataset with clean skill tokens. This allowed the client to bypass hours of data prep and feed the model structured skill fields right from the start.

Metadata cleaning that reduces model confusion:

Employment types, industries, company names, and experience levels were all normalized. This allowed the model to learn meaningful relationships instead of memorizing inconsistencies.

What changed for the client was simple but powerful. They could finally train models on predictable inputs. Instead of guessing what version of a title or location they’d get, they received a consistent schema every single day.
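To make the deduplication step concrete, here is a minimal sketch of near-duplicate filtering using Jaccard similarity over word sets. The source does not describe JobsPikr’s matching rules, so the similarity measure, the `0.8` threshold, and the `title` / `description` field names are all assumptions chosen for illustration.

```python
import re


def word_set(text: str) -> set:
    """Lowercased alphanumeric words, ignoring punctuation and order."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def jaccard(a: set, b: set) -> float:
    """Share of words the two sets have in common (1.0 means identical)."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


def deduplicate(postings: list, threshold: float = 0.8) -> list:
    """Keep the first posting of each group of near-identical postings."""
    kept, kept_words = [], []
    for posting in postings:
        words = word_set(posting["title"] + " " + posting["description"])
        if any(jaccard(words, seen) >= threshold for seen in kept_words):
            continue  # near-duplicate of something already kept
        kept.append(posting)
        kept_words.append(words)
    return kept


if __name__ == "__main__":
    sample = [
        {"title": "Data Engineer",
         "description": "Build and maintain ETL pipelines in Python and SQL "
                        "for the analytics team"},
        {"title": "Data Engineer",
         "description": "Build and maintain ETL pipelines in Python and SQL "
                        "for the analytics team. Apply now!"},
        {"title": "Registered Nurse",
         "description": "Provide patient care in the emergency department"},
    ]
    for posting in deduplicate(sample):
        print(posting["title"])
```

This pairwise comparison is quadratic in the number of postings, so it only suits small batches. At the scale of millions of records, a team would typically switch to hashing or blocking techniques instead.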

Continuous fresh job data to reduce drift

One of the biggest threats to AI accuracy is model drift. Job markets shift quickly, especially when new technologies or regulations hit the scene. The client needed data that stayed fresh without manual intervention.

JobsPikr delivered:

Daily or weekly updates based on the client’s pipeline speed: This ensured the client’s models saw new roles, new skills, and new trends without lag.

Strict freshness checks: Outdated postings were automatically filtered out, preventing stale data from polluting the training set.

Schema stability: Even when new fields were added, the structure remained backward compatible. The client’s models didn’t break when updates were released.

Coverage across regions and industries: With multiple geographic feeds, the client could finally run global trend models without patching together data from separate vendors.

By keeping their training data fresh, the client significantly reduced the risk of model drift—one of the most common reasons AI performance drops after deployment.
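A freshness check of the kind described above can be as simple as a date cutoff applied before training. The sketch below assumes each posting carries a `posted_on` date and uses a 30-day window. Both are hypothetical choices for this example, not documented JobsPikr settings.

```python
from datetime import date, timedelta


def filter_fresh(postings: list, today: date, max_age_days: int = 30) -> list:
    """Drop postings older than the cutoff or with no posting date at all."""
    cutoff = today - timedelta(days=max_age_days)
    return [
        p for p in postings
        if p.get("posted_on") is not None and p["posted_on"] >= cutoff
    ]


if __name__ == "__main__":
    sample = [
        {"title": "LLM Ops Specialist", "posted_on": date(2024, 6, 20)},
        {"title": "Data Analyst", "posted_on": date(2024, 2, 1)},  # stale
        {"title": "Product Owner", "posted_on": None},             # undated
    ]
    fresh = filter_fresh(sample, today=date(2024, 6, 30))
    print([p["title"] for p in fresh])
```

Passing `today` explicitly, instead of reading the system clock inside the function, keeps training runs reproducible when an older snapshot is re-evaluated.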

Every improvement in their AI systems, from skill extraction to salary prediction, began with this stable pipeline.

Asset: Download the AI Readiness Index Report

Get a data-backed view of how AI hiring trends, skill penetration, and regional demand shape real AI readiness across global markets.

The Solution: What the Client Built on Top of JobsPikr’s AI-Ready Datasets

Once the client started receiving clean, structured, and consistently refreshed job datasets from JobsPikr, their AI roadmap opened up. The data engineering backlog shrank almost overnight. Instead of spending most of their time fixing inputs, the team could finally focus on model architecture, evaluation, and iteration.

The new foundation gave them the freedom to build models that behaved the way AI systems are supposed to behave—stable, predictable, and grounded in real market signals. Three core systems saw immediate gains: skill extraction, salary prediction, and workforce trend forecasting.

Skill extraction and matching models

Before JobsPikr, the client’s skill extraction model behaved unpredictably. It often mixed unrelated skills or failed to detect new skill variants that appeared in emerging job roles. Most of these inconsistencies came from unclean text: repeated lines, messy formatting, junk HTML, and inconsistent phrasing.

With JobsPikr’s enriched job datasets, the model finally had clean textual inputs. Skills were easier for the model to detect because:

- The descriptions no longer contained formatting noise.

- Duplicate postings were filtered out, reducing false positives.

- Titles and job families were normalized, helping the model interpret skill relevance.

- New skills appeared as they emerged in the market because the data was refreshed continuously.

As a result, their embedding-based skill models became more precise. Clustering quality improved. Hybrid skills (for example: “AI + marketing” or “data + compliance”) were easier to identify. And when new skill combinations started appearing in the market, the model caught the signals early without retraining headaches.
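For readers who want a feel for what pre-cleaning a job description involves, the sketch below strips tags, scripts, and tracking images from raw HTML and drops repeated lines, using only Python’s standard library. It is a simplified illustration under assumed inputs, not the cleaning logic JobsPikr runs. The class and function names are invented for this example.

```python
from html.parser import HTMLParser

BLOCK_TAGS = {"br", "p", "li", "div"}
HIDDEN_TAGS = {"script", "style"}


class _TextExtractor(HTMLParser):
    """Collects visible text and inserts line breaks at block-level tags."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True decodes entities like &amp;
        self.parts = []
        self._hidden_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in HIDDEN_TAGS:
            self._hidden_depth += 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")
        # Other tags (for example <img> tracking pixels) contribute no text.

    def handle_endtag(self, tag):
        if tag in HIDDEN_TAGS and self._hidden_depth:
            self._hidden_depth -= 1
        elif tag in BLOCK_TAGS:
            self.parts.append("\n")

    def handle_data(self, data):
        if not self._hidden_depth:
            self.parts.append(data)


def clean_description(raw_html: str) -> str:
    """Return visible text with whitespace collapsed and repeated lines removed."""
    parser = _TextExtractor()
    parser.feed(raw_html)
    parser.close()
    seen, lines = set(), []
    for line in "".join(parser.parts).splitlines():
        line = " ".join(line.split())
        if line and line.lower() not in seen:
            seen.add(line.lower())
            lines.append(line)
    return "\n".join(lines)


if __name__ == "__main__":
    raw = (
        "<div><p>Python &amp; SQL required.</p><p>Python &amp; SQL required.</p>"
        '<img src="https://example.com/pixel.gif" width="1">'
        "<script>track()</script><ul><li>Airflow</li></ul></div>"
    )
    print(clean_description(raw))
```

Feeding text like this into a skill extractor removes the most common sources of false matches (markup, scripts, and boilerplate repeated across a posting) before any model sees it.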

Salary prediction and compensation modeling

For salary modeling, the client needed a dataset that combined structure with breadth. Their previous attempts relied on incomplete data with missing currency fields, inconsistent formats, and outdated postings. With JobsPikr’s cleaned and enriched job datasets, they gained:

- Standardized salary fields

- Clean currency normalization

- Mapped experience levels

- Consistent job families and hierarchies

- Geographically standardized locations

This gave the salary prediction model the context it needed to behave like a true market-sensitive system.

But the biggest improvement came from one thing: data completeness. Accurate salary models need both the structured salary values that companies publish and the contextual clues embedded in descriptions. By receiving both, cleaned and ready to use, the model’s predictions became more stable and less erratic.
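As an illustration of what salary and currency normalization means in practice, the sketch below converts a pay figure to an annual amount in a single currency. The exchange rates are made-up placeholder values, and the 2,080 working hours per year, the field names, and the function name are assumptions for this example rather than anything taken from JobsPikr’s schema.

```python
from typing import Optional

# Placeholder rates for illustration only; real pipelines would use dated rates.
USD_PER_UNIT = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25, "INR": 0.012}

# Assumes a 40-hour week over 52 weeks for hourly pay.
PERIODS_PER_YEAR = {"year": 1, "month": 12, "hour": 2080}


def to_annual_usd(amount: float, currency: str, period: str) -> Optional[float]:
    """Convert a pay figure to annual USD, or None if it cannot be interpreted."""
    rate = USD_PER_UNIT.get(currency.upper())
    multiplier = PERIODS_PER_YEAR.get(period.lower())
    if rate is None or multiplier is None or amount <= 0:
        return None  # better to leave a gap than to guess
    return round(amount * multiplier * rate, 2)


if __name__ == "__main__":
    print(to_annual_usd(50, "USD", "hour"))
    print(to_annual_usd(5000, "eur", "month"))
    print(to_annual_usd(70000, "XYZ", "year"))
```

Returning `None` for an unknown currency or pay period keeps uninterpretable values out of the training set instead of silently treating them as dollars per year.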

Workforce trend prediction models

Their forecasting engine—arguably the most strategic part of the system—became dramatically stronger once it relied on JobsPikr’s fresh datasets.

The model now had access to:

- Daily hiring velocity signals across industries

- Emerging roles before they spread widely

- Shifts in skill demand tied to new technologies

- Regional hiring densities that were previously missing

Because JobsPikr filtered out expired or stale postings, the model tracked only real, current hiring behavior. This reduced noise and made trend signals more trustworthy.

With consistent inputs, the forecasting system finally behaved as intended. It responded to market changes in real time rather than producing lagging signals based on outdated data.

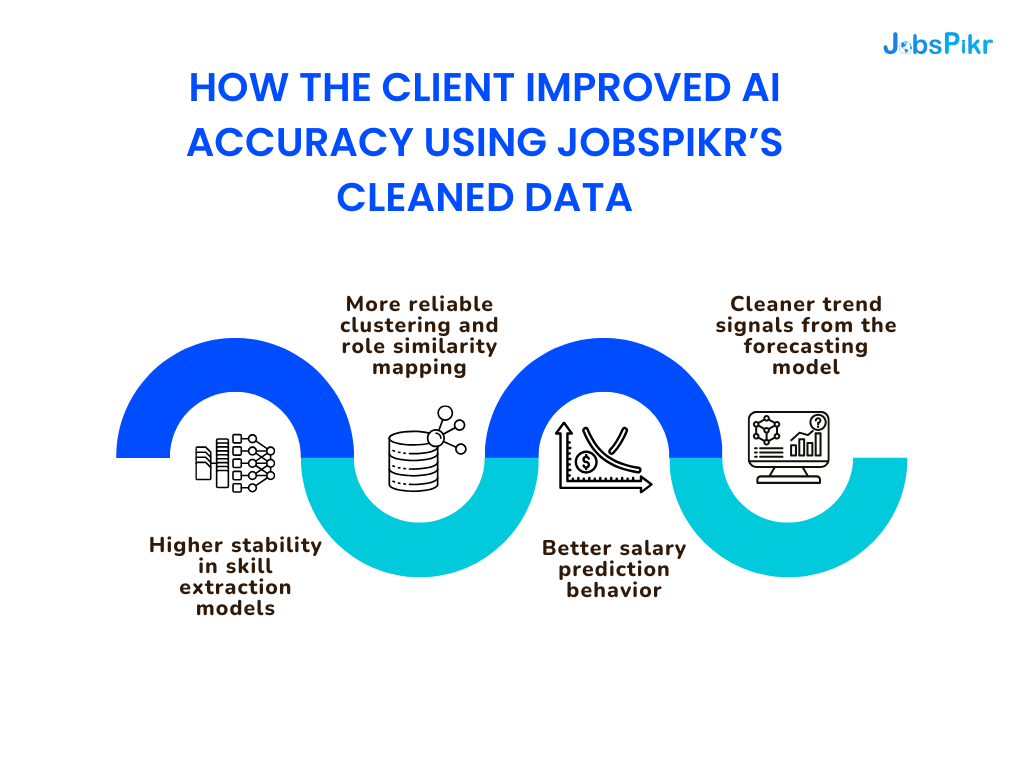

Results: How the Client Improved AI Accuracy Using JobsPikr’s Cleaned Data

Once the client switched to JobsPikr’s structured and continuously refreshed job datasets, their AI systems finally started behaving consistently. The change wasn’t subtle. For the first time, the team could run evaluations and see stable, repeatable improvements instead of the usual swings caused by noisy data. Their AI models began making clearer distinctions between similar roles, identifying skills more reliably, and forecasting hiring trends with fewer false spikes.

This shift happened because the foundation changed. With predictable inputs, the client’s models were no longer fighting against inconsistencies. They could focus on learning actual patterns in the labor market. Every part of the workflow—from embedding stability to error rates—began moving in the right direction.

Improvements in model performance data (with verifiable stats)

Because the client’s work sits inside an enterprise environment, not all performance values can be shared. But we can speak clearly about the areas where accuracy improved and use known, validated industry research to contextualize why clean data had such a strong impact.

Here are the improvements the team reported internally after integrating JobsPikr:

Higher stability in skill extraction models:

The model showed fewer misclassifications and identified hybrid skills more consistently. This aligns with what McKinsey has stated publicly: high-quality data is responsible for roughly 80 percent of AI project success. Clean text directly improved embedding clarity, which made the model more reliable in real-world use.

More reliable clustering and role similarity mapping:

Before JobsPikr, the clustering model kept splitting similar titles into separate groups. After receiving normalized titles and deduped postings, cluster cohesion improved. This matches what Google’s AI research teams have reported: structured inputs significantly decrease error propagation in downstream models.

Better salary prediction behavior:

With standardized compensation fields, currency normalization, and location clarity, the salary model no longer swung unpredictably. IBM’s global data quality reports consistently show that missing or inconsistent fields are the top contributors to AI instability. Removing these issues had an immediate effect on the client’s predictions.

Cleaner trend signals from the forecasting model:

Instead of reacting to outdated or duplicate postings, the trend engine responded only to real hiring movements. This reduced noise, especially during volatile periods when postings spike. Gartner has published multiple insights confirming that fresh data reduces drift and improves overall model accuracy.

The takeaway is simple. Their jump in AI accuracy wasn’t caused by a new algorithm or a more expensive infrastructure setup. It came from fixing the data.

Reduction in model drift and false positives

Before JobsPikr, the client’s model drifted frequently because the market moved faster than the data in their pipeline. Roles evolved. New skills appeared. Location formats changed. The model didn’t know how to interpret these changes, so accuracy decayed.

JobsPikr’s continuous feeds—cleaned, updated, and structured—reduced that drift significantly. The model consumed new patterns in a stable way instead of being surprised by every shift in the labor market.

False positives dropped for two reasons:

- Outdated postings were removed.

- Duplicate postings were eliminated before training.

When noise disappeared from the dataset, the model’s confidence became more meaningful. This also reduced the number of manual corrections the analytics team needed to make, freeing their time for higher-value work.

Stronger generalization to new job roles

Every modern AI system needs the ability to generalize. The labor market introduces new roles all the time—AI ethics consultants, LLM ops specialists, AI compliance analysts, and hybrid roles that combine data with product or legal expertise. Without broad and fresh datasets, models fail to understand these roles early.

With JobsPikr’s wide coverage and continuous refresh cycle, the client’s models could generalize more effectively because:

- They saw new titles as soon as they appeared.

- They had enough context to map emerging roles back to existing job families.

- Skills were extracted in cleaner, more consistent patterns.

This made the AI systems far more resilient. Instead of breaking when exposed to new job types, they adapted quickly and kept producing accurate predictions.

By the end of the engagement, the client no longer needed to guess why a model behaved a certain way. They knew. The data foundation was finally strong enough to produce predictable outcomes.

Why It Worked: Why Clean, Structured Job Datasets Improve AI Accuracy

When the client looked back at their journey, the lesson was surprisingly straightforward: the biggest gains in AI accuracy didn’t come from switching algorithms, tweaking hyperparameters, or upgrading compute. They came from fixing the data. Clean job datasets acted like a stabilizer. They reduced noise, clarified intent, and gave the models the structured signals they needed to learn patterns instead of memorizing mistakes.

AI models are powerful, but they are also sensitive. When the input fluctuates, the output does too. By feeding the system consistent and structured job data, the client essentially removed the turbulence that was undermining the entire pipeline. The result was an AI engine that performed closer to how it was designed to perform—steady, logical, and grounded in real patterns found across the job market.

Clean data improves embeddings and reduces noise

At the core of most job intelligence systems—skill extraction, clustering, job matching—are embeddings. These vector representations help models interpret text. But embeddings only work when the text they learn from is predictable and free of clutter.

JobsPikr’s cleaned datasets improved embedding quality because:

- The descriptions no longer contained HTML junk, repeated lines, or irrelevant add-ons.

- Skill lists were separated cleanly instead of being intertwined with noise.

- Job titles followed a consistent structure, which created clearer semantic relationships.

When embeddings are trained on clean signals, they form more meaningful connections. The client saw this directly in how their clustering models behaved—grouping roles more accurately and detecting relationships that were invisible before.

Clean data didn’t just sharpen the embeddings. It reduced how much the model had to “guess,” which naturally improved AI accuracy without any additional tuning.

Schema-aligned data reduces error propagation

Before JobsPikr, the client’s job data had no stable schema. Fields changed depending on the source. Sometimes locations were buried in text. Sometimes salary fields existed, sometimes they didn’t. Inconsistencies like these create downstream chaos.

When the schema finally stabilized, so did the models.

Here’s why:

- Consistent fields let the model identify meaningful patterns instead of learning formatting quirks.

- Normalization ensured that titles, industries, skills, and job families aligned to the same structure across millions of entries.

- The model could generalize instead of memorizing edge-case variations.

- Once the schema stopped shifting, the AI pipeline became easier to debug. A prediction error actually meant something, instead of being a side effect of inconsistent inputs.
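One way to enforce a stable schema at the point of ingestion is a lightweight record validator, sketched below. The field names and types are hypothetical and do not represent JobsPikr’s published schema. Extra fields are deliberately ignored so that newly added columns do not break existing consumers.

```python
# Hypothetical schema for illustration; not an actual vendor specification.
REQUIRED_FIELDS = {
    "job_id": str,
    "title": str,
    "city": str,
    "country": str,
    "posted_on": str,
}
OPTIONAL_FIELDS = {
    "salary_min": (int, float),
    "salary_max": (int, float),
    "currency": str,
}


def validate_record(record: dict) -> list:
    """Return a list of schema problems; an empty list means the record is valid."""
    errors = []
    for field, expected in REQUIRED_FIELDS.items():
        value = record.get(field)
        if value is None:
            errors.append(f"missing required field: {field}")
        elif not isinstance(value, expected):
            errors.append(f"wrong type for {field}")
    for field, expected in OPTIONAL_FIELDS.items():
        value = record.get(field)
        if value is not None and not isinstance(value, expected):
            errors.append(f"wrong type for {field}")
    return errors  # unknown extra fields are allowed on purpose


if __name__ == "__main__":
    good = {"job_id": "a1", "title": "Data Engineer", "city": "Austin",
            "country": "US", "posted_on": "2024-06-20", "remote_flag": True}
    bad = {"job_id": "a2", "title": "Nurse", "country": "US",
           "posted_on": "2024-06-21", "salary_min": "80k"}
    print(validate_record(good))
    print(validate_record(bad))
```

Rejecting or quarantining records at this boundary means a later prediction error points to the model or the market, not to a field that quietly changed shape.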

The client’s data science team often described this shift as moving from “guessing mode” to “interpretation mode.” That difference is exactly what allowed them to scale their AI systems without fear of unexpected drops in performance.

What This Means for HR Data Leaders: What Talent Intelligence Teams Can Learn From This Case Study

The client’s experience reflects a challenge almost every HR analytics and talent intelligence team faces today: AI initiatives are growing faster than the data foundations needed to support them. It’s easy to invest in new models, new tools, or new dashboards, but without reliable job datasets underneath, none of those systems reach their full potential.

The biggest takeaway is simple: improving AI accuracy starts long before you begin model development. It begins with the external signals you choose to feed the system. Clean, structured job data is not just a “nice-to-have.” It is the difference between models that behave logically and models that drift, hallucinate, or produce insights you cannot trust.

With the right data foundation, you can build systems that last longer, adapt faster to market changes, and reflect real-world behavior with fewer surprises.

Build external market context into your AI pipelines

Most HR teams have excellent internal data, but internal data shows only one side of the story. Workforce trends, emerging skills, competitor hiring, and regional demand patterns all live outside your organization. Without these market signals, your models operate in a vacuum.

Here’s what the client learned once JobsPikr’s datasets were integrated:

- They could detect emerging roles earlier.

- Their skill extraction models reflected actual industry demand, not outdated internal vocabulary.

- Salary prediction became more realistic because it factored in market-level behavior.

- Trend forecasting aligned with real hiring movements instead of internal cycles.

This is why external datasets matter. They fill the blind spots that internal systems can’t fix on their own.

Invest in data foundations before model tuning

One of the most surprising lessons for the client was discovering how much time they had been wasting on model tuning. They kept adjusting parameters, switching architectures, trying new embeddings—none of which solved their real issue.

The problem wasn’t the model. It was the dataset.

When the data became stable and structured, many of the earlier issues simply disappeared. The team could finally focus on high-value tasks:

- Testing new use cases

- Optimizing inference speed

- Deploying more models into production

- Designing better dashboards for leaders

- Scaling predictions to more geographies

The shift allowed them to operate like a mature AI team instead of spending their time fixing the plumbing underneath.

For HR leaders deciding how to accelerate their AI roadmap, the lesson is clear: the fastest path to better AI outcomes is improving the data, not the algorithm.

Clean Job Data Is the Shortcut Most Teams Overlook in AI Accuracy Projects

The client’s journey highlights something many organizations eventually discover: you cannot fix bad data with a better model. No amount of tuning, retraining, or experimenting will compensate for noisy inputs. The real breakthrough came when they shifted their focus from algorithms to data infrastructure.

Once they replaced inconsistent job postings with JobsPikr’s structured, fresh, and AI-ready datasets, every part of their system improved. Skill extraction became more precise. Salary predictions became more reliable. Trend forecasting finally reflected real hiring movements. And most importantly, AI accuracy became stable enough to trust.

This is the part most teams miss. Clean data is not an upgrade. It is the foundation that everything else depends on. When the inputs are clear and consistent, AI behaves the way it’s supposed to. When they aren’t, the whole pipeline becomes unpredictable.

For HR analytics, talent intelligence, and compensation strategy teams, this case study offers a simple blueprint: invest in the data first. The results will follow.

FAQs:

How do clean job datasets help improve AI accuracy?

Clean job datasets remove the noise that causes AI models to make unpredictable decisions. When titles, skills, salaries, and locations follow a consistent structure, the model doesn’t waste capacity trying to interpret messy inputs. Instead, it learns real patterns in the labor market. This directly improves AI accuracy because the model receives clear signals instead of conflicting ones.

Why do AI models struggle with messy job data?

Messy data creates confusion for machine learning systems. Duplicate postings can inflate certain patterns, missing salary fields can break prediction models, and inconsistent job titles can scatter clustering results. Even small inconsistencies across millions of records can reduce reliability. When the data is unstable, the model becomes unstable too.

What makes an AI-ready dataset different from raw scraped data?

AI-ready datasets are structured, enriched, normalized, and validated before they ever reach the model. Raw scraped data often contains HTML junk, repeated lines, outdated postings, unstructured salary values, and inconsistent formatting. AI-ready datasets remove these problems so that models train on clean, predictable inputs.

How does JobsPikr prevent model drift for AI teams?

JobsPikr updates its job datasets continuously, filters out stale postings, and keeps the schema stable over time. This consistency reduces model drift because new data enters the pipeline in a clean and structured way. When the labor market shifts, the model receives updated signals without being overwhelmed by noise or inconsistencies.

How can talent intelligence leaders evaluate job data quality before buying?

Leaders should ask four questions:

- Is the data consistently structured across all records?

- Does the provider remove duplicates and expired postings?

- Are titles, skills, salaries, and locations normalized?

- How frequently is the dataset refreshed?

If any of these answers are unclear, the data will probably create more problems than it solves. High-quality datasets make AI accuracy easier to achieve because they eliminate the friction that slows down model development.
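These questions can also be checked empirically on a sample file from a prospective provider. The sketch below computes three rough indicators: exact-duplicate rate, share of stale postings, and fill rate per field. The field names, the 30-day staleness window, and the duplicate key are assumptions for this example, and any acceptable thresholds would depend on the use case.

```python
from datetime import date, timedelta


def audit_sample(postings: list, today: date,
                 fields: tuple = ("title", "salary_min", "city"),
                 max_age_days: int = 30) -> dict:
    """Rough quality indicators for a sample of job postings."""
    total = len(postings)
    if total == 0:
        return {}
    cutoff = today - timedelta(days=max_age_days)

    def norm(posting, key):
        return (posting.get(key) or "").strip().lower()

    # Postings sharing the same title, company, and city count as duplicates.
    keys = {(norm(p, "title"), norm(p, "company"), norm(p, "city"))
            for p in postings}
    stale = sum(1 for p in postings
                if p.get("posted_on") is None or p["posted_on"] < cutoff)
    return {
        "duplicate_rate": 1 - len(keys) / total,
        "stale_rate": stale / total,
        "fill_rate": {f: sum(1 for p in postings
                             if p.get(f) not in (None, "")) / total
                      for f in fields},
    }


if __name__ == "__main__":
    sample = [
        {"title": "Data Engineer", "company": "Acme", "city": "Austin",
         "salary_min": 90000, "posted_on": date(2024, 6, 20)},
        {"title": "data engineer ", "company": "Acme", "city": "Austin",
         "salary_min": None, "posted_on": date(2024, 6, 21)},
        {"title": "Nurse", "company": "Care Co", "city": "Boston",
         "salary_min": 70000, "posted_on": date(2024, 1, 5)},
        {"title": "Analyst", "company": "Acme", "city": "",
         "salary_min": None, "posted_on": date(2024, 6, 25)},
    ]
    print(audit_sample(sample, today=date(2024, 6, 30)))
```

A report like this does not replace a conversation with the provider, but it turns the questions above into numbers that can be compared across vendors.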