- **TL;DR**

- How to Choose a Credible Job Data Provider in 2025

- What is Data Provenance and How Does it Apply to Job Posting Data

- Trust the data behind your workforce decisions

- What Makes a Job Data Provider Trustworthy in 2025

- How to Evaluate Data Provenance in Job Data Providers

- What Data Transparency Should Look Like when Buying Job Data

- How Strong Data Quality Assurance Works in Real Job Data Pipelines

- What Uptime and SLAs Should Look Like for Job Data You Rely On

- How Security and Anonymization Affect Data Trust in Job Data

- Why Auditability and Compliance Now Define Trusted Job Data Providers

- Buyer Checklist: How to Evaluate a Job Data Provider’s Trust Stack in 2025

- Why the Trust Stack is a Long-Term Advantage, Not Overhead

- How JobsPikr Approaches Trust, Provenance, and Transparency

FAQs

- 1. What is data provenance and why does it matter for job data?

- 2. How can I tell if a job data provider is truly trustworthy?

- 3. What role does data validation play in job posting datasets?

- 4. Why is ethical data collection important if job postings are public?

- 5. What should I prioritize when comparing trusted data providers in 2025?

**TL;DR**

In 2025, a job data provider is only as credible as the trust stack behind the dataset. If you cannot trace where the job data came from, how it was collected, how it was cleaned, and how it changes over time, you cannot rely on the insights either. That is why data provenance has become the core buying signal for serious labor market intelligence teams.

A credible provider should be able to explain their data provenance clearly, show data transparency around coverage and limitations, and prove that data quality assurance and data validation are not afterthoughts. You should expect documented checks for duplicates, reposts, stale listings, missing fields, location ambiguity, and employer normalization issues, because those are the exact problems that break job posting datasets in the real world.

Trust is also operational. A trustworthy job data provider backs their delivery with uptime and freshness SLAs, protects the data with strong security and anonymization practices, and supports auditability so you can defend the dataset during procurement, risk reviews, and compliance checks.

This article gives you a simple framework to evaluate trusted data providers in 2025 and a buyer checklist you can use to compare vendors without getting stuck in marketing claims.

How to Choose a Credible Job Data Provider in 2025

For a long time, job data sat in the background. It powered reports, dashboards, and occasional market scans, but it rarely sat at the center of critical decisions. That has changed.

In 2025, job data feeds everything from hiring strategy and compensation planning to workforce forecasting and AI-driven insights. When leadership asks questions about skills shortages, market expansion, or competitor hiring moves, job posting data is often the first place teams look. The problem is that many teams still treat all job data as if it were equally reliable.

It is not.

AI and analytics amplify bad data fast

Modern analytics tools move quickly. They ingest large datasets, spot patterns, and surface insights in minutes. That speed is powerful, but it also means mistakes scale instantly. If your job data has weak data provenance, hidden duplicates, or stale records, those issues do not stay isolated. They ripple through models, benchmarks, and forecasts.

This matters even more as job data is increasingly used to train or inform AI systems. AI does not pause to question whether a job posting is a repost, a ghost job, or a syndicated duplicate. It assumes the data is correct. When that assumption is wrong, the output looks confident but quietly misleads.

Industry research consistently shows that poor data quality remains one of the biggest blockers to effective analytics and AI adoption. Gartner has repeatedly highlighted data quality and governance as top risks in analytics programs, especially when organizations rely on external data sources they do not fully control. Job data, with its high velocity and constant change, sits right in the danger zone.

“Big coverage” can hide weak foundations

Many job data providers still sell on scale. Millions of postings. Thousands of sources. Global reach. On the surface, that sounds reassuring. But volume alone says nothing about trust.

Large datasets often mask fundamental issues. The same job may appear five or six times across different platforms. Expired roles can linger for months. Company names may fragment across subsidiaries and brand variations. Without strong data quality assurance and data validation, coverage becomes noise.

This is where data transparency becomes a real differentiator. A trusted job data provider is not afraid to talk about where coverage is strong, where it is weaker, and what trade-offs exist. When a vendor avoids these conversations, it is often because the foundation cannot support scrutiny.

Trust gaps show up as business risk, not just messy records

Most teams do not notice trust issues at the dataset level. They notice them when outcomes feel off.

Hiring demand looks inflated compared to reality.

Compensation benchmarks do not match what recruiters see on the ground.

Skill trend reports contradict internal experience.

At that point, teams often rework analyses, adjust assumptions, or blame methodology. But in many cases, the root cause is simpler. The job data provider was not built for trust.

In 2025, trust is no longer a technical detail. It is a business requirement. Procurement teams ask harder questions. Legal teams want to understand ethical data collection practices. Data leaders need to defend where insights came from and why they should be believed.

If a provider cannot clearly explain their data provenance and validation approach, they introduce risk that shows up far beyond the data team.

Pro Tip: If you are evaluating job data vendors right now, one practical step is to ask for a sample dataset along with an explanation of how those records were sourced, validated, and refreshed. The clarity of that response often tells you more than any sales deck.

Job Data Trust Stack Buyer Checklist

What is Data Provenance and How Does it Apply to Job Posting Data

If you are buying job posting data, you are not just buying rows in a table. You are buying a story about the market. And if you cannot trace where that story came from, you are basically trusting a stranger with your decisions.

That is what data provenance is. It is the ability to answer, without hesitation, “Where did this job record come from, how was it collected, and what happened to it before it landed in my dataset?”

Provenance is the receipt for every job posting

The easiest way to think about data provenance is this: it is the receipt you should be able to ask for, at the record level.

Not a vague “we collect from thousands of sources” line. A real explanation for a real job posting.

For one job record, can the job data provider tell you the original source, like whether it came from a company career site, an ATS feed, a job board, or an aggregator that scraped an aggregator? Can they explain the collection method, like whether it was an API pull, a licensed feed, or crawling?

And then the part that matters just as much: can they explain what they did to the record after they collected it?

Because job data does not arrive clean. It gets normalized, deduped, enriched, and validated. Titles get standardized. Companies get resolved. Locations get cleaned. Sometimes two postings get merged because they are the same role. Sometimes one posting gets flagged because it looks stale.

If a provider cannot explain those steps clearly, you do not really have data provenance. You have a black box.
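To make the idea of a record-level receipt concrete, here is a minimal Python sketch of what one provenance entry could hold. The class and field names (`ProvenanceReceipt`, `source_type`, `collection_method`, `transformations`) and the example URL are hypothetical; they illustrate the questions above rather than any provider's actual schema.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class TransformationStep:
    """One documented change applied to a job record after collection."""
    step: str            # e.g. "title_normalization", "dedupe_merge"
    detail: str          # plain-language description of what changed
    applied_at: datetime


@dataclass
class ProvenanceReceipt:
    """Hypothetical record-level provenance entry for a single job posting."""
    job_id: str
    source_type: str        # e.g. "career_site", "ats_feed", "job_board", "aggregator"
    source_url: str
    collection_method: str  # e.g. "api", "licensed_feed", "crawl"
    collected_at: datetime
    transformations: list[TransformationStep] = field(default_factory=list)

    def log(self, step: str, detail: str) -> None:
        """Record a transformation instead of applying it invisibly."""
        self.transformations.append(
            TransformationStep(step, detail, datetime.now(timezone.utc))
        )

    def explain(self) -> str:
        """Return a readable lineage summary, oldest step first."""
        lines = [
            f"{self.job_id}: {self.source_type} via {self.collection_method} "
            f"({self.source_url}), collected {self.collected_at.isoformat()}"
        ]
        lines += [f"  - {t.step}: {t.detail}" for t in self.transformations]
        return "\n".join(lines)


receipt = ProvenanceReceipt(
    job_id="job-123",
    source_type="career_site",
    source_url="https://careers.example.com/jobs/123",
    collection_method="crawl",
    collected_at=datetime(2025, 1, 15, tzinfo=timezone.utc),
)
receipt.log("title_normalization", "'Sr Data Eng' -> 'Senior Data Engineer'")
receipt.log("dedupe_merge", "merged with 2 job-board copies of the same role")
print(receipt.explain())
```

The useful property is that every transformation is appended to the record's history rather than applied silently, so the record itself can answer "what happened to you?" during a review.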

Why Data Provenance Matters Specifically for Job Data

Job posting data is not like a customer list. It changes constantly.

A role gets reposted with a new date. A location line changes from “Remote” to “Hybrid.” A salary range appears after legal review. The same job shows up on five different sites with five slightly different versions of the text.

Now imagine you are using that data for something serious, like skills trend analysis or labor demand intelligence. If your dataset is full of reposts treated as “new jobs,” demand looks like it is rising when it is not. If duplicates are not collapsed properly, your dashboards overcount. If you cannot tell whether a record came directly from an employer or through three layers of syndication, you cannot judge how reliable it is.

This is exactly why data trust starts with data provenance. Without provenance, you cannot confidently separate real market movement from data noise.

Provenance is not the same as a “list of sources”

Vendors love showing source counts. It looks impressive. It also does not prove much.

A list of sources is marketing. Data provenance is accountability.

Here is the difference in real life. If you are looking at a posting for “Senior Data Engineer,” and you want to know whether it is real, current, and unique, you need to know where it originated. A posting pulled directly from a company career site or an ATS-connected feed is generally more dependable than one that has been syndicated across multiple boards and copied around.

A trusted job data provider should be able to tell you which one you are looking at, and why they believe it is the “best version” of that job record.
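One way to make the "best version" decision explicit is a source-priority rule: among copies already judged to be the same job, keep the copy closest to the employer and break ties by recency. The ranking in `SOURCE_PRIORITY` is an assumption that mirrors the reasoning in this section, not an industry standard, and the URLs are placeholders.

```python
from dataclasses import dataclass
from datetime import date

# Assumed ranking: lower number = closer to the employer, so usually more dependable.
SOURCE_PRIORITY = {"career_site": 0, "ats_feed": 1, "job_board": 2, "aggregator": 3}


@dataclass
class JobCopy:
    source_type: str
    url: str
    last_seen: date


def pick_canonical(copies: list[JobCopy]) -> JobCopy:
    """Choose the copy to keep for one job that appears in several places.

    Prefers the source type closest to the employer; among equally ranked
    copies, prefers the most recently seen one. Unknown source types rank last.
    """
    unknown_rank = len(SOURCE_PRIORITY)
    return min(
        copies,
        key=lambda c: (SOURCE_PRIORITY.get(c.source_type, unknown_rank),
                       -c.last_seen.toordinal()),
    )


copies = [
    JobCopy("aggregator", "https://agg.example.net/x", date(2025, 3, 10)),
    JobCopy("career_site", "https://careers.example.com/42", date(2025, 3, 8)),
    JobCopy("job_board", "https://board.example.org/7", date(2025, 3, 9)),
]
print(pick_canonical(copies).url)  # https://careers.example.com/42
```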

If they cannot, you will spend months doing that work yourself, or worse, you will ship decisions based on shaky assumptions.

How data provenance becomes the base layer of data trust

If you step back, the whole trust stack depends on this.

Data transparency becomes meaningful only when provenance exists. Data quality assurance is only defensible when you can show what checks ran on what source inputs. Data validation gets stronger when you can trace anomalies back to collection paths. Even compliance conversations become easier when you can prove ethical data collection and record lineage.

This is why, when buyers say they want “trusted data providers,” what they are really asking for is this: can you prove your dataset is legitimate, explainable, and auditable?

And in 2025, the quickest way to test that is simple.

Ask the job data provider to pick one job record from the sample dataset and walk you through its full lineage. Where it came from, how it was collected, what transformations were applied, and how updates are handled over time. If they struggle, the dataset is probably going to struggle too.

Trust the data behind your workforce decisions

When job data informs strategy, it needs to be explainable, auditable, and reliable under pressure. Start with a provenance-first evaluation.

What Makes a Job Data Provider Trustworthy in 2025

Most job data providers sound credible in the first 10 minutes.

They’ll talk about scale. They’ll show a dashboard. They’ll say the words “quality,” “compliance,” and “real-time.” And if you are not careful, you end up picking a vendor because the demo felt polished.

Then, two months later, your team is arguing over basic things like, “Why is this company showing up as three different employers?” or “Why did demand spike 28% overnight?” or the classic, “Are we sure these are not duplicates?”

That is when you realize what credibility actually means in job data.

A credible job data provider is not the one with the loudest coverage claims. It’s the one whose data holds up when you start asking annoying questions.

Credibility starts when you can challenge the dataset

Here’s the test I like.

Take one job record from the sample dataset. Just one. Pick something common, like “Data Analyst” in London, or “Sales Development Representative” in Austin.

Now ask the provider to walk you through it like you are a skeptical reviewer, not a buyer.

Where did this record originate? Not “we cover thousands of sources.” This record. Did it come from a company career site, an ATS feed, a job board, or did it bounce around through syndication?

How was it collected? API, feed, crawling, partner integration?

What happened after collection? Was it normalized? Did it get deduplicated against other copies? If the title was messy, what did they standardize it to and why? If the company name was inconsistent, how did they resolve it?

If they can answer those questions calmly, with specifics, you are looking at an operation that takes data provenance seriously. If they dodge, generalize, or talk in circles, that is usually a sign you are buying an output without the underlying controls.

That is the first real marker of data trust.

A trustworthy provider is transparent about trade-offs

Good job data is messy by nature. Anyone claiming “perfect coverage” or “100% accuracy” is either exaggerating or not measuring.

The providers you can trust usually have a different vibe. They will tell you where the dataset is strong and where it is weaker. They will explain what they do with edge cases. They will admit constraints, like when certain sources update slowly or when salary parsing is unreliable in specific regions.

That is data transparency, and it matters more than people think.

Because your team is going to build decisions on this. If there are known gaps and you find them later, you will not just lose time. You will lose confidence in the entire dataset. And once that happens, every insight becomes a debate.

Quality is not a promise. It is a repeatable routine

This is where most vendor conversations get fuzzy.

Every provider says they do “QA.” Fine. What does that actually mean for job posting data, which is full of duplicates, reposts, stale roles, and weird formatting?

A credible job data provider has a routine for data quality assurance and data validation that runs whether or not a customer is watching.

They should be able to explain things like:

How they detect duplicates when the same job appears across multiple boards with slightly different text.

How they separate a repost from a genuinely new role, because those are not the same signal.

How they handle freshness, like what “active” means in their system and how they detect stale postings that never got taken down properly.

How they deal with missing or malformed fields, because job data breaks in very predictable ways.

You do not need them to share proprietary code. But you do need a clear explanation that makes sense to a data team and stands up in procurement.

If the provider cannot explain their validation approach in plain language, you are probably going to do the validation yourself later. That is not a partnership. That is a clean-up project you pay for.

Reliability, security, and auditability are part of trust, not add-ons

Job data is often public, but the way it is delivered and governed is still a serious enterprise issue.

If delivery is inconsistent, you cannot run intelligence workflows properly. If SLAs are vague, you will always be guessing whether gaps are “normal.” If security is an afterthought, your procurement team is going to slow everything down, or block the vendor completely.

And auditability matters more in 2025 than it did a few years ago. Teams want to know: if a metric changes, can we trace why? If a record was corrected, can we see what changed and when? If legal asks how the data was collected, can we show ethical data collection practices instead of hand-waving?

That is the difference between “a dataset” and a trusted data provider.
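Answering "what changed and when" usually means storing versions of a posting instead of overwriting it. Here is a minimal sketch, assuming each ingested snapshot is kept as a plain dict with a timestamp; the field names and values are hypothetical.

```python
from datetime import datetime


def diff_versions(old: dict, new: dict) -> dict:
    """Return {field: (old_value, new_value)} for every field that changed."""
    fields = sorted(old.keys() | new.keys())
    return {f: (old.get(f), new.get(f)) for f in fields if old.get(f) != new.get(f)}


def audit_trail(versions: list[tuple[datetime, dict]]) -> list[tuple[datetime, dict]]:
    """Turn time-ordered snapshots of one posting into (timestamp, changes) entries."""
    trail = []
    for (_, previous), (seen_at, current) in zip(versions, versions[1:]):
        changes = diff_versions(previous, current)
        if changes:
            trail.append((seen_at, changes))
    return trail


versions = [
    (datetime(2025, 2, 1), {"title": "Data Analyst", "location": "Remote", "salary": None}),
    (datetime(2025, 2, 8), {"title": "Data Analyst", "location": "Hybrid", "salary": None}),
    (datetime(2025, 2, 15), {"title": "Data Analyst", "location": "Hybrid", "salary": "£45k-£55k"}),
]
for seen_at, changes in audit_trail(versions):
    print(seen_at.date(), changes)
# 2025-02-08 {'location': ('Remote', 'Hybrid')}
# 2025-02-15 {'salary': (None, '£45k-£55k')}
```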

How to Evaluate Data Provenance in Job Data Providers

This is the part most buyers skip. Not because it isn’t important, but because it feels uncomfortable to push vendors this hard.

You shouldn’t skip it.

If data provenance is weak, everything else you build on top of the dataset becomes fragile. And the only way to know whether provenance is real or just a slide in a deck is to ask very specific questions and listen carefully to how they’re answered.

Where job postings actually come from, and why it matters

Not all job data sources are equal, even though they often get lumped together.

Some job postings originate directly from company career sites or ATS platforms. These tend to be the cleanest and most reliable because they are closest to the employer. When a role is updated or taken down, the change usually reflects reality quickly.

Others come from job boards and aggregators. These add distribution, but they also introduce lag, formatting changes, and duplication. By the time a posting shows up here, it may already be copied elsewhere.

Then there are syndication chains. One posting gets published, picked up by a board, copied by another platform, scraped again, and redistributed. At that point, it becomes hard to tell whether you are looking at a fresh role or a recycled one.

A job data provider that understands data provenance can tell you which of these paths a record followed. One that doesn’t will treat them all the same, which is how inflated demand and false trends creep in.

Lineage matters more than source count

You will often hear vendors talk about “thousands of sources.” That sounds impressive, but it is not the question you should be asking.

The real question is lineage. For a given job posting, can the provider tell you the original source and the path it took through their system?

Did the record start at a company career page and flow directly into the dataset, or did it come through two or three intermediaries? Was it merged with other copies? Was it updated when the original changed?

This is what data provenance looks like in practice. It is not about how many places data might come from, but about whether each record has a clear and defensible history.

If a provider cannot trace lineage at the record level, you are effectively blind when something looks off later.

Transformation steps should be explainable, not mysterious

Every job posting gets changed after collection. That is not a problem. It is necessary.

Titles get cleaned up. Locations get standardized. Companies get normalized. Duplicates get collapsed. Sometimes fields get dropped because they are unreliable.

The issue is not that transformations happen. The issue is when they happen invisibly.

A trusted job data provider should be able to explain, in plain terms, what transformations they apply and why. Not in marketing language. In operational language.

For example, if two postings look similar, how do they decide whether to merge them or keep them separate? If a salary range looks unrealistic, do they flag it, fix it, or discard it? If a posting keeps getting refreshed every 30 days with no real change, how is that handled?

These decisions shape the dataset more than people realize. Provenance includes these choices, not just the original scrape.

Provenance questions that reveal vendor maturity

Here are a few questions that tend to separate mature providers from everyone else:

Can you show me one job posting and walk me through its full history, from source to delivery?

How do you distinguish a repost from a genuinely new role?

If a job posting changes after first ingestion, how do you reflect that in the data?

Do you keep raw versions alongside normalized records, and for how long?

Strong providers answer these calmly and consistently. Weak ones pivot to high-level statements or avoid specifics.

That difference matters, because when your internal stakeholders challenge the numbers, you will need answers that go deeper than “that’s how the data looks.”

Pro Tip: If you are actively comparing vendors, ask each of them to do the same provenance walkthrough using the same type of role. The contrast is usually obvious within minutes.

What Data Transparency Should Look Like when Buying Job Data

Transparency is one of those words everyone uses and very few people define. In job data, it often gets reduced to surface-level claims like “we’re transparent about our sources” or “we provide full coverage details.” That sounds reassuring, but it doesn’t tell you what you need to know.

Real data transparency is not about how much information a provider shares. It is about whether they are willing to show you the parts of the dataset that are inconvenient.

Coverage transparency without vague promises

Every job data provider has uneven coverage. That is normal. Different regions behave differently. Some industries post heavily on career sites. Others rely on boards. Some markets refresh postings daily. Others lag for weeks.

A transparent job data provider will tell you this upfront.

They will explain where coverage is strong and where it thins out. They will tell you if certain geographies skew toward syndicated data, or if some job families are underrepresented because employers rarely post them publicly. They will not hide behind a single global number.

When coverage transparency is missing, teams discover gaps the hard way. A dashboard looks solid until someone filters by a specific region or role and sees odd drops or spikes. At that point, trust erodes quickly, even if the rest of the data is usable.

Transparency is not about perfection. It is about setting accurate expectations.
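A transparent provider can back the coverage conversation with numbers broken down by segment, and you can run the same breakdown on a sample dataset yourself. This sketch assumes each record carries hypothetical `region` and `source_type` fields and treats job boards and aggregators as "syndicated"; both choices are illustrative.

```python
from collections import Counter

# Assumption: these source types count as "syndicated" rather than employer-direct.
SYNDICATED = {"job_board", "aggregator"}


def coverage_report(records: list[dict]) -> dict[str, dict]:
    """Summarize record volume and syndicated share per region."""
    totals = Counter(r["region"] for r in records)
    syndicated = Counter(r["region"] for r in records if r["source_type"] in SYNDICATED)
    return {
        region: {"records": n, "syndicated_share": round(syndicated[region] / n, 2)}
        for region, n in totals.items()
    }


sample = [
    {"region": "UK", "source_type": "career_site"},
    {"region": "UK", "source_type": "ats_feed"},
    {"region": "UK", "source_type": "job_board"},
    {"region": "BR", "source_type": "aggregator"},
    {"region": "BR", "source_type": "job_board"},
]
for region, stats in coverage_report(sample).items():
    print(region, stats)
```

A region where nearly everything arrives through syndication is not necessarily unusable, but it deserves exactly the kind of caveat this section describes.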

Freshness and update cadence should never be a mystery

Job posting data ages fast. A role posted six weeks ago may already be filled, paused, or rewritten internally. That makes freshness one of the most important trust signals in job data.

A trustworthy provider should be able to tell you, clearly, how often different sources update and how freshness is measured in their system. Not “near real-time” or “frequently,” but something concrete.

Do company career sites refresh daily while boards lag? How long does it take for an update or takedown to reflect in the dataset? What happens when a posting stops changing but never formally expires?

These details matter because they affect how you interpret trends. Without freshness transparency, teams often treat old demand as current demand and make decisions on outdated signals.
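It helps to see how much a definition of "active" depends on a couple of thresholds. The sketch below is one possible rule, assuming the provider tracks when a posting was last seen at its source and when its content last changed; the threshold values are hypothetical and should come from the provider's own documentation.

```python
from datetime import date

# Hypothetical thresholds; real values should come from the provider's documentation.
MAX_DAYS_SINCE_SEEN = 3   # not observed at the source for longer -> treat as taken down
MAX_DAYS_UNCHANGED = 60   # still listed but untouched for longer -> possibly stale


def freshness_status(last_seen: date, last_changed: date, today: date) -> str:
    """Classify a posting as 'active', 'stale', or 'expired'."""
    if (today - last_seen).days > MAX_DAYS_SINCE_SEEN:
        return "expired"
    if (today - last_changed).days > MAX_DAYS_UNCHANGED:
        return "stale"  # still online, but never updated or formally closed
    return "active"


today = date(2025, 6, 1)
print(freshness_status(date(2025, 5, 31), date(2025, 5, 20), today))  # active
print(freshness_status(date(2025, 6, 1), date(2025, 2, 1), today))    # stale
print(freshness_status(date(2025, 5, 20), date(2025, 5, 20), today))  # expired
```

Changing either threshold changes how much "current demand" a dashboard reports, which is why the definition should be written down rather than implied.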

Being honest about limitations is a trust signal

This is counterintuitive, but the most trustworthy job data providers talk openly about what their data cannot do.

They will tell you where salary data is unreliable because employers avoid disclosure. They will explain that not all postings include structured skills. They will flag that remote roles may still have hidden geographic constraints.

This kind of honesty builds data trust because it lets teams adjust their analysis instead of discovering problems midstream.

When a provider claims their dataset supports every use case equally well, that is usually a red flag. Job data is powerful, but it has known blind spots. A transparent provider helps you work around them rather than pretending they do not exist.

Field-level transparency matters more than people expect

Some fields in job data are harder than others. Compensation, location, and seniority are the usual trouble spots.

A transparent job data provider will explain how confident they are in these fields, how they validate them, and how often they fail. They may even provide confidence scores or flags so you can decide how much weight to give each record.

This level of transparency is especially important when job data feeds automated systems or executive reporting. If everything looks equally “clean” on the surface, teams lose the ability to judge risk.
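Here is a small illustration of a field-level confidence flag, using salary as the example. It assumes a hypothetical record layout with optional `salary_min`/`salary_max` fields and a free-text `description`; the three confidence levels and the regular expression are illustrative choices, not a standard.

```python
import re

# Deliberately simple pattern for ranges like "$80,000 - $95,000" or "80000 to 95000".
RANGE_PATTERN = re.compile(r"\$?\s*(\d[\d,]*)\s*(?:-|to)\s*\$?\s*(\d[\d,]*)")


def extract_salary(record: dict) -> dict:
    """Return a salary range plus a confidence flag.

    Assumed levels: 'high' for structured fields supplied by the source,
    'medium' for a range parsed from free text, 'none' when nothing is found.
    """
    if record.get("salary_min") is not None and record.get("salary_max") is not None:
        return {"min": record["salary_min"], "max": record["salary_max"], "confidence": "high"}
    match = RANGE_PATTERN.search(record.get("description", ""))
    if match:
        low, high = (int(group.replace(",", "")) for group in match.groups())
        return {"min": low, "max": high, "confidence": "medium"}
    return {"min": None, "max": None, "confidence": "none"}


print(extract_salary({"salary_min": 50000, "salary_max": 60000}))
print(extract_salary({"description": "Pay: $80,000 - $95,000 per year"}))
print(extract_salary({"description": "Competitive pay"}))
```

Text parsing earns a lower flag for a reason: the same pattern would also match a phrase like "team of 5 - 10 engineers", which is exactly the kind of risk a confidence flag lets downstream users weigh.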

Pro Tip: If you want to test transparency during evaluation, ask the provider to point out three known weaknesses in their dataset and explain how they mitigate them. The answer tells you a lot about whether transparency is cultural or just cosmetic.

How Strong Data Quality Assurance Works in Real Job Data Pipelines

This is where trust usually breaks or holds.

Most teams don’t lose confidence in a job data provider because of one bad record. They lose confidence because small issues keep repeating. Counts don’t line up. Trends feel inflated. The same role shows up again and again under slightly different names. Over time, people stop trusting the numbers, even if they can’t point to a single smoking gun.

That almost always traces back to weak or inconsistent data quality assurance.

Quality assurance is not a cleanup step

One of the biggest misconceptions about job data is that quality can be fixed at the end. Collect everything first, clean it later. That approach fails quickly at scale.

Job posting data is noisy from the moment it is created. Employers repost roles. Platforms reformat content. Locations get abbreviated. Salaries appear in text instead of fields. If quality checks are not built into the pipeline, problems compound silently.

A credible job data provider treats data quality assurance as a continuous system, not a batch process. Checks run automatically, repeatedly, and predictably. They do not depend on someone noticing a problem downstream.

When you ask a provider about QA, listen for whether they describe routines or reactions. Routines build trust. Reactions don’t.

Deduplication is where most providers struggle

Duplicates are the single biggest issue in job posting datasets, and they are rarely obvious.

The same job can appear across a company career site, three job boards, and two aggregators. The text will look slightly different each time. Titles get tweaked. Bullet points move around. One version says “Remote,” another says “Hybrid.”

A weak system treats these as separate jobs. A strong one recognizes them as the same role and collapses them into a single record.

This is not trivial, and it is where data quality assurance really shows. A trustworthy job data provider should be able to explain how they identify duplicates, how confident they are in that process, and what happens when the system is unsure.
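To show what "the same role" can mean in code, here is a minimal sketch of pairwise duplicate scoring with Python's standard `difflib.SequenceMatcher`. It assumes company names have already been resolved, and the two thresholds are hypothetical. The middle band returns `needs_review` rather than guessing, which is one possible answer to "what happens when the system is unsure."

```python
from difflib import SequenceMatcher

# Hypothetical thresholds; a real system would tune them on labeled pairs.
DUPLICATE_THRESHOLD = 0.90
REVIEW_THRESHOLD = 0.75


def normalize_text(text: str) -> str:
    """Lowercase and collapse whitespace so formatting noise is not a 'difference'."""
    return " ".join(text.lower().split())


def classify_pair(job_a: dict, job_b: dict) -> str:
    """Return 'duplicate', 'needs_review', or 'distinct' for two postings.

    Assumes company names were already resolved to one canonical form.
    """
    if job_a["company"] != job_b["company"]:
        return "distinct"
    text_a = normalize_text(f"{job_a['title']} {job_a['description']}")
    text_b = normalize_text(f"{job_b['title']} {job_b['description']}")
    score = SequenceMatcher(None, text_a, text_b).ratio()
    if score >= DUPLICATE_THRESHOLD:
        return "duplicate"
    if score >= REVIEW_THRESHOLD:
        return "needs_review"  # route to a human or a stricter check instead of guessing
    return "distinct"


board_copy = {"company": "Acme", "title": "Senior Data Engineer",
              "description": "Build and maintain data pipelines in Python and SQL. Remote."}
site_copy = {"company": "Acme", "title": "Senior  Data Engineer",
             "description": "Build and maintain data pipelines in  Python and SQL. Hybrid."}
print(classify_pair(board_copy, site_copy))  # duplicate
```

Comparing every pair does not scale to millions of postings, so production systems usually narrow the candidates first (for example, by employer and normalized title) before scoring.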

Just as important, they should explain how they handle reposts. A repost is not the same as a new role, but it is also not meaningless. Treating reposts incorrectly is one of the fastest ways to inflate demand trends.
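A repost rule can be sketched in a few lines. This version assumes company, title, and location are already normalized, and uses a hypothetical 90-day window: a posting for a role already seen within that window is tagged as a repost and keeps a pointer to when the role first appeared, so demand is counted per role rather than per posting.

```python
from datetime import date

# Hypothetical window: a posting for a role seen within this many days counts as a repost.
REPOST_WINDOW_DAYS = 90


def role_key(job: dict) -> tuple:
    """Identity of a role; assumes company, title, and location are already normalized."""
    return (job["company"], job["title"], job["location"])


def tag_reposts(jobs: list[dict]) -> list[dict]:
    """Tag each posting as 'new' or 'repost' and record when its role first appeared."""
    first_seen: dict[tuple, date] = {}
    last_seen: dict[tuple, date] = {}
    tagged = []
    for job in sorted(jobs, key=lambda j: j["posted"]):
        key = role_key(job)
        previous = last_seen.get(key)
        if previous is not None and (job["posted"] - previous).days <= REPOST_WINDOW_DAYS:
            tagged.append({**job, "kind": "repost", "role_first_seen": first_seen[key]})
        else:
            first_seen[key] = job["posted"]
            tagged.append({**job, "kind": "new", "role_first_seen": job["posted"]})
        last_seen[key] = job["posted"]
    return tagged


jobs = [
    {"company": "Acme", "title": "Data Analyst", "location": "London", "posted": date(2025, 1, 5)},
    {"company": "Acme", "title": "Data Analyst", "location": "London", "posted": date(2025, 2, 4)},
    {"company": "Acme", "title": "Data Analyst", "location": "London", "posted": date(2025, 3, 6)},
    {"company": "Acme", "title": "Data Analyst", "location": "Leeds", "posted": date(2025, 2, 10)},
]
tagged = tag_reposts(jobs)
print(sum(t["kind"] == "new" for t in tagged), "roles from", len(tagged), "postings")
# 2 roles from 4 postings
```

The rule is deliberately crude. An employer hiring a second person for the same title inside the window would be folded into the first role, which is why a provider should be able to explain how their own rule handles that case.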

Normalization decisions shape every insight

Normalization sounds technical, but it has very real consequences.

If “Sr Software Eng,” “Senior Software Engineer,” and “Software Engineer III” are treated as different roles, your role-level analysis becomes fragmented. If “NY,” “New York,” and “New York City” are not resolved consistently, geographic trends get distorted.

Strong data quality assurance includes clear normalization rules that are applied consistently. It also includes the ability to revisit those rules as markets evolve. Titles change. New roles appear. Skills shift.

A provider that cannot explain how normalization works, or how often it is reviewed, is usually hardcoding assumptions that age badly.
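Here is a minimal sketch of rule-based normalization for the title and location examples above. The alias tables are hypothetical and tiny. Mapping "Software Engineer III" to "Senior" and "NY" to New York City are assumptions made for illustration; both are exactly the kind of judgment call a provider should document.

```python
import re

# Hypothetical rule tables; real ones are far larger and reviewed as titles evolve.
TOKEN_ALIASES = {"sr": "senior", "jr": "junior", "eng": "engineer", "engr": "engineer"}
# Assumption for illustration: level "III" means senior. This varies by employer.
LEVEL_SUFFIXES = {"iii": "senior"}
# Assumption for illustration: "NY" means the city here. A real resolver needs
# context, because "NY" can also mean New York State.
LOCATION_ALIASES = {
    "ny": "New York City, NY, US",
    "nyc": "New York City, NY, US",
    "new york": "New York City, NY, US",
    "new york city": "New York City, NY, US",
}


def normalize_title(raw: str) -> str:
    """Map title variants such as 'Sr Software Eng' to one canonical form."""
    tokens = [TOKEN_ALIASES.get(t, t) for t in re.findall(r"[a-z]+", raw.lower())]
    if tokens and tokens[-1] in LEVEL_SUFFIXES:
        level = LEVEL_SUFFIXES[tokens.pop()]
        if level not in tokens:
            tokens.insert(0, level)
    return " ".join(tokens).title()


def normalize_location(raw: str) -> str:
    """Resolve known location spellings; unknown values pass through unchanged."""
    key = " ".join(raw.lower().replace(".", "").split())
    return LOCATION_ALIASES.get(key, raw.strip())


titles = ["Sr Software Eng", "Senior Software Engineer", "Software Engineer III"]
print({normalize_title(t) for t in titles})  # {'Senior Software Engineer'}
print({normalize_location(p) for p in ["NY", "New York", "New York City"]})  # {'New York City, NY, US'}
```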

Validation is about preventing nonsense before it spreads

Data validation is the guardrail that stops bad data from becoming insight.

In job data, validation often includes checks like whether salary ranges fall within realistic bounds, whether locations map to real places, whether required fields are present, and whether dates make sense. These checks sound basic, but they catch a surprising amount of noise.

What matters is not just that validation exists, but what happens when records fail. Are they flagged? Removed? Corrected? Left in the dataset with no warning?

A trusted job data provider can tell you how often records fail validation and what the default response is. That transparency is part of data trust. If everything passes all the time, something is wrong.
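The checks described above translate naturally into code. This sketch assumes hypothetical field names, salary bounds, and a small `KNOWN_LOCATIONS` set standing in for a real geocoder. Failing records are flagged with the names of the checks they failed, not silently dropped, and the report includes the failure rate a buyer should be able to ask for.

```python
from datetime import date

# Hypothetical values for illustration only.
SALARY_BOUNDS = (10_000, 1_000_000)                 # plausible annual range, one currency
REQUIRED_FIELDS = ("title", "company", "location", "posted")
KNOWN_LOCATIONS = {"London, UK", "Austin, TX, US"}  # stand-in for a real geocoder


def validate(record: dict, today: date) -> list[str]:
    """Return the names of the checks a record fails; an empty list means it passed."""
    failures = [f"missing_{name}" for name in REQUIRED_FIELDS if not record.get(name)]
    low, high = record.get("salary_min"), record.get("salary_max")
    if low is not None and high is not None:
        if low > high or low < SALARY_BOUNDS[0] or high > SALARY_BOUNDS[1]:
            failures.append("salary_out_of_bounds")
    if record.get("location") and record["location"] not in KNOWN_LOCATIONS:
        failures.append("unmapped_location")
    if record.get("posted") and record["posted"] > today:
        failures.append("posted_in_future")
    return failures


def validation_report(records: list[dict], today: date) -> dict:
    """Flag failing records (rather than dropping them) and report the failure rate."""
    flagged = {}
    for index, record in enumerate(records):
        failures = validate(record, today)
        if failures:
            flagged[index] = failures
    return {"failure_rate": len(flagged) / len(records), "flagged": flagged}


records = [
    {"title": "Data Analyst", "company": "Acme", "location": "London, UK",
     "posted": date(2025, 5, 20), "salary_min": 40_000, "salary_max": 50_000},
    {"title": "Data Analyst", "company": "Acme", "location": "London, UK",
     "posted": date(2025, 5, 20), "salary_min": 40, "salary_max": 50},
    {"title": "", "company": "Acme", "location": "Atlantis", "posted": date(2025, 7, 1)},
    {"title": "SDR", "company": "Beta", "location": "Austin, TX, US", "posted": date(2025, 5, 30)},
]
report = validation_report(records, today=date(2025, 6, 1))
print(report["failure_rate"])  # 0.5
print(report["flagged"])
```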

Human review still matters, even in automated systems

Automation is essential for scale, but job data still benefits from human judgment in specific places.

Edge cases, high-impact employers, and new role types often need review. The best providers combine automated QA with targeted sampling and human oversight. Not everywhere, not always, but where it matters.

This hybrid approach is another signal of maturity. It shows that the provider understands where automation is strong and where it needs backup.

Pro Tip: If you want to pressure-test QA during evaluation, ask one simple question: “What percentage of records fail your quality or validation checks, and what happens to them?” A real answer here usually separates robust systems from hand-waving.

What Uptime and SLAs Should Look Like for Job Data You Rely On

This is the part almost everyone underestimates at the start.

When teams evaluate a job data provider, they focus heavily on what the data looks like. Coverage, fields, structure, quality. All of that matters. But once the data is wired into dashboards, models, and internal workflows, a different question becomes far more important.

Can we rely on this data showing up, on time, every time?

That is where uptime and SLAs stop being operational details and start becoming trust signals.

Uptime is not an API metric; it is an insight metric

If your job data arrives late, your insights are late. There is no way around that.

A missed delivery window can throw off weekly hiring reports. A delayed update can make a hiring surge look like it happened days later than it actually did. If you are using job data to monitor competitor activity or fast-moving skill demand, even small delays matter.

A credible job data provider understands this. They do not talk about uptime only in terms of API availability. They talk about delivery reliability, freshness guarantees, and how often data arrives when you expect it to.

You should be able to ask simple questions and get clear answers. How often is data delivered? What percentage of deliveries meet that timeline? How is lateness measured and reported?

If the answers are vague, expect surprises later.
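Those questions become measurable once you log when each delivery was due and when it actually arrived. The sketch below assumes that log exists on your side; the one-hour grace period and the sample timestamps are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional


def delivery_sla_report(expected: list[datetime], actual: list[Optional[datetime]],
                        grace: timedelta) -> dict:
    """Summarize how often deliveries met their window.

    `expected` and `actual` are paired by index; None in `actual` means the
    delivery never arrived. A delivery counts as on time if it lands within
    `grace` of its expected time.
    """
    on_time, delays = 0, []
    for due, arrived in zip(expected, actual):
        if arrived is None:
            continue
        if arrived <= due + grace:
            on_time += 1
        else:
            delays.append(arrived - due)
    return {
        "on_time_pct": round(100 * on_time / len(expected), 1),
        "late": len(delays),
        "missed": sum(a is None for a in actual),
        "worst_delay": max(delays, default=timedelta(0)),
    }


# Hypothetical log: daily deliveries due at 06:00, with a one-hour grace period.
expected = [datetime(2025, 4, day, 6, 0) for day in range(1, 6)]
actual = [
    datetime(2025, 4, 1, 6, 5),
    datetime(2025, 4, 2, 6, 40),
    datetime(2025, 4, 3, 9, 0),   # three hours late
    None,                         # never arrived
    datetime(2025, 4, 5, 5, 55),
]
print(delivery_sla_report(expected, actual, grace=timedelta(hours=1)))
```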

SLAs should be specific enough to be testable

Many SLAs sound good on paper but are useless in practice.

Phrases like “best effort,” “near real-time,” or “high availability” feel comforting but do not give you anything you can actually measure or enforce. A trustworthy job data provider commits to specific expectations and is comfortable putting numbers behind them.

That does not mean every SLA has to be extreme. It means they should be clear.

For example, how quickly are new postings reflected in the dataset after publication? How long does it take for an update or takedown to propagate? What is the guaranteed uptime window over a month?

These commitments matter because they shape how your team designs workflows. Without them, you are forced to build defensive logic everywhere, which slows everything down.
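Specific SLAs can be checked against data you already have. This sketch assumes you can pair each posting's publication time with the time it became available in the dataset, and uses the nearest-rank percentile method; the 95th percentile and the 24-hour target in the example are hypothetical numbers, not recommended values. The `allowed_downtime` helper shows what a monthly uptime figure means in minutes.

```python
import math
from datetime import datetime, timedelta


def percentile_nearest_rank(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value with at least pct% of values at or below it."""
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_latency_sla(pairs: list[tuple[datetime, datetime]], pct: float,
                      target: timedelta) -> bool:
    """Check the pct-th percentile of (published_at, available_at) latency against target."""
    hours = [(available - published).total_seconds() / 3600 for published, available in pairs]
    return percentile_nearest_rank(hours, pct) <= target.total_seconds() / 3600


def allowed_downtime(uptime_pct: float, days: int = 30) -> timedelta:
    """Downtime budget implied by an uptime commitment over a period."""
    return timedelta(days=days) * (1 - uptime_pct / 100)


# Hypothetical sample: 20 postings that took 1 to 20 hours to appear in the dataset.
start = datetime(2025, 5, 1)
pairs = [(start, start + timedelta(hours=h)) for h in range(1, 21)]
print(meets_latency_sla(pairs, pct=95, target=timedelta(hours=24)))  # True
print(allowed_downtime(99.9))                                        # 0:43:12
```

For example, a 99.9% commitment over a 30-day month allows about 43 minutes of downtime, a number your team can actually hold a provider to.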

Incident handling reveals how seriously reliability is taken

No system is perfect. Things break. Sources change. Feeds go down.

The difference between a reliable provider and an unreliable one is not whether incidents happen. It is how they are handled.

A credible job data provider has a defined incident response process. They can explain how issues are detected, how customers are notified, and how fixes are communicated. They can also explain whether missed data is replayed or backfilled, or whether gaps simply remain.

This matters more than most buyers realize. Silent failures erode trust faster than visible ones. If your team has to discover gaps on their own, confidence in the entire dataset starts to slip.
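Providers should detect failures before customers do, but a simple volume check on your side is a useful backstop. This sketch assumes you record daily record counts per source; the source names, the seven-day window, and the 50% drop threshold are hypothetical and would need tuning for sources that are naturally spiky.

```python
from statistics import median

# Hypothetical rule: flag a source when its latest volume falls below half its recent median.
DROP_RATIO = 0.5


def detect_gaps(daily_counts: dict[str, list[int]], window: int = 7) -> list[str]:
    """Return sources whose latest daily count fell sharply versus their trailing median.

    `daily_counts` maps a source name to counts ordered oldest to newest.
    """
    flagged = []
    for source, counts in daily_counts.items():
        if len(counts) <= window:
            continue  # not enough history to judge
        baseline = median(counts[-window - 1:-1])
        if baseline > 0 and counts[-1] < DROP_RATIO * baseline:
            flagged.append(source)
    return flagged


counts = {
    "career_sites": [1000, 1020, 990, 1010, 1005, 995, 1000, 1012],
    "board_feed": [500, 510, 495, 505, 498, 502, 500, 40],
    "new_source": [10, 12],
}
print(detect_gaps(counts))  # ['board_feed']
```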

Backfills and replay support are not optional

Job data pipelines are long-running systems. Mistakes happen. A bug gets fixed. A validation rule changes. A source improves.

When that happens, the question is simple. Can the provider replay or backfill data so your historical views stay consistent?

Providers that cannot do this often leave customers with fractured datasets where logic changed midstream. That makes trend analysis painful and forces teams to explain why last quarter’s numbers no longer line up.

A trusted job data provider plans for this. They treat replay and backfill as part of reliability, not as special favors.
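
One way to see whether a logic change was backfilled consistently is to compare per-period totals between two deliveries of the same history. The sketch below is a hypothetical buyer-side check: it assumes you keep monthly posting counts from each dataset version and treats any move beyond a 2% relative tolerance (an arbitrary illustrative threshold) as a restatement worth asking about.

```python
def restated_periods(old_counts, new_counts, tolerance=0.02):
    """Compare per-period posting counts from two dataset versions.

    Returns {period: (old, new)} for periods whose count moved by more than
    `tolerance` (relative), or that exist in only one version.
    """
    changes = {}
    for period in sorted(set(old_counts) | set(new_counts)):
        old = old_counts.get(period)
        new = new_counts.get(period)
        if old is None or new is None:
            changes[period] = (old, new)  # period added or dropped entirely
        elif old == 0:
            if new != 0:
                changes[period] = (old, new)
        elif abs(new - old) / old > tolerance:
            changes[period] = (old, new)
    return changes


if __name__ == "__main__":
    v1 = {"2025-01": 1000, "2025-02": 1100}
    v2 = {"2025-01": 1010, "2025-02": 950}
    for period, (before, after) in restated_periods(v1, v2).items():
        print(f"{period}: {before} -> {after}")
```

A restated period is not a problem by itself. It becomes one when the provider cannot say which fix or backfill caused it.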

Pro Tip: If you’re evaluating job data providers right now, one useful step is to request a small sample dataset and ask for a walkthrough of how those records were sourced, validated, and maintained. The contrast between vendors usually becomes clear very quickly.

How Security and Anonymization Affect Data Trust in Job Data

Job postings are public, so security is often treated as a checkbox. That is a mistake.

While the content itself may be visible on the open web, the way job data is collected, processed, stored, and delivered still creates real risk. In 2025, security and anonymization are no longer just IT concerns. They are part of whether a job data provider can be trusted at all.

Public data does not mean careless handling

This is a common misunderstanding. Yes, job postings are public. No, that does not mean a provider can be casual about how they handle them.

A credible job data provider treats job data like any other enterprise dataset. Access is controlled. Delivery endpoints are secured. Internal systems follow least-privilege principles. Logs exist to show who accessed what and when.

This matters because job data is rarely consumed in isolation. It flows into internal analytics systems, planning tools, and sometimes AI pipelines that touch sensitive business decisions. If the provider’s security posture is weak, that risk transfers to you.

Procurement and security teams know this. That is why they increasingly ask for documentation, audits, and evidence, even when the data itself is public.

Anonymization is about responsibility, not secrecy

Anonymization in job data is subtle. It is not about hiding employer names or removing obvious identifiers. It is about protecting patterns and signals that could be misused if handled irresponsibly.

For example, raw crawl logs can expose platform behavior. Overly granular timestamps can reveal internal hiring processes. Poorly handled metadata can make it easy to reverse-engineer collection methods in ways that violate platform rules.

A trusted job data provider is careful about what they expose and to whom. They separate raw ingestion artifacts from customer-facing datasets. They think about how data could be combined or misinterpreted downstream.
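
Separating raw ingestion artifacts from customer-facing records can be as simple as an explicit projection step. This is a minimal sketch under stated assumptions: the internal field names are hypothetical, and coarsening timestamps to the calendar day is one illustrative way to avoid exposing fine-grained timing patterns, not a rule every provider follows.

```python
from datetime import datetime

# Hypothetical internal-only fields; a real pipeline would maintain its own list.
INTERNAL_FIELDS = {"crawler_id", "proxy_region", "raw_http_headers", "fetch_latency_ms"}
# Timestamps that are coarsened to a date before delivery.
COARSENED_TIMESTAMPS = ("posted_at", "fetched_at")


def to_customer_record(raw: dict) -> dict:
    """Build a customer-facing record from a raw ingestion record without mutating it."""
    record = {k: v for k, v in raw.items() if k not in INTERNAL_FIELDS}
    for name in COARSENED_TIMESTAMPS:
        value = record.get(name)
        if isinstance(value, datetime):
            record[name] = value.date().isoformat()  # keep the day, drop the time of day
    return record
```

Because the allow/deny decision lives in one function, it can be reviewed and versioned like any other pipeline rule.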

This is part of ethical data collection, even if it does not get marketed that way.

Security maturity shows up in how vendors answer simple questions

You do not need a deep security background to evaluate this layer. You just need to listen carefully.

- Can the provider explain how access is controlled for your data?

- Do they offer different access levels for different users or systems?

- Can they show audit logs or at least describe how activity is tracked?
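
Those three questions map onto a small pattern: permissions granted per role, and every access decision written to an audit trail. The sketch below is purely illustrative; the role names and permission strings are hypothetical, and a production system would persist the log rather than keep it in memory.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical roles: analysts see postings, pipelines may also read raw metadata.
ROLE_PERMISSIONS = {
    "analyst": {"read:postings"},
    "pipeline": {"read:postings", "read:raw_metadata"},
    "admin": {"read:postings", "read:raw_metadata", "manage:keys"},
}


@dataclass
class AccessControl:
    audit_log: list = field(default_factory=list)

    def check(self, user: str, role: str, permission: str) -> bool:
        """Least privilege: unknown roles get no permissions."""
        allowed = permission in ROLE_PERMISSIONS.get(role, set())
        # Log every decision, including denials, so activity can be reviewed later.
        self.audit_log.append({
            "at": datetime.now(timezone.utc).isoformat(),
            "user": user,
            "role": role,
            "permission": permission,
            "allowed": allowed,
        })
        return allowed
```

Logging denials as well as grants is what makes the trail useful in a review: it shows not only who accessed data, but who tried to.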

Clear, calm answers usually signal real systems. Hesitation or deflection often signals that security was bolted on late.

In 2025, many deals stall not because of pricing or features, but because security reviews raise questions vendors cannot answer cleanly. That alone should tell you how important this layer has become.

Responsible handling builds long-term trust

Security and anonymization are not just about passing reviews. They affect long-term trust.

When providers handle data responsibly, customers feel more confident expanding use cases. Job data moves from exploratory analysis into core planning workflows. That only happens when teams believe the dataset will not create surprises under scrutiny.

A job data provider that takes security seriously rarely needs to oversell it. Their documentation, processes, and posture speak for themselves.

Pro Tip: If you want a practical check, ask the provider what security review questions they typically receive from enterprise buyers and how they address them. The familiarity of that conversation tells you whether security is embedded or improvised.

Job Data Trust Stack Buyer Checklist

Why Auditability and Compliance Now Define Trusted Job Data Providers

This is usually where the conversation changes tone.

Up to this point, most discussions about job data feel technical. Sources, quality, delivery, security. Then procurement or legal gets involved, and suddenly the question becomes much simpler and much harder at the same time.

Can we defend this data?

Auditability and compliance are what allow you to answer that question without hedging.

Auditability is about being able to explain change

Job data is not static. Records get updated. Duplicates get merged. Validation rules evolve. Sources get added or removed.

When someone looks at a chart six months later and asks why a number changed, “the data updated” is not a sufficient answer.

A trusted job data provider can show what changed, when it changed, and why. That might be through versioned datasets, change logs, or lineage tracking. The exact implementation varies, but the principle is the same. Changes should be traceable, not mysterious.
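
A change log does not need to be elaborate to be useful. This minimal sketch compares two versions of the same record and emits one entry per changed field, tagged with a reason and a date. The record shape and the reason label are hypothetical; the point is that "why" is stored next to "what".

```python
from datetime import date


def diff_record(old: dict, new: dict, reason: str, changed_on: date) -> list:
    """Return one change-log entry per field that differs between two record versions."""
    entries = []
    for key in sorted(set(old) | set(new)):
        before, after = old.get(key), new.get(key)
        if before != after:
            entries.append({
                "record_id": new.get("id", old.get("id")),
                "field": key,
                "before": before,
                "after": after,
                "reason": reason,  # e.g. the rule or fix that caused the change
                "changed_on": changed_on.isoformat(),
            })
    return entries


if __name__ == "__main__":
    v1 = {"id": "job-123", "location": "NYC"}
    v2 = {"id": "job-123", "location": "New York, NY, US"}
    for entry in diff_record(v1, v2, "location normalization update", date(2025, 7, 1)):
        print(entry)
```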

This matters more than people expect. When teams cannot explain change, confidence in the entire dataset drops, even if the current data is technically better than before.

Compliance is no longer a regional issue

In the past, compliance questions around job data were often brushed aside because the data was public. That argument does not hold as well in 2025.

Data collection practices are being scrutinized more closely across regions. Ethical data collection is no longer just about legality. It is about whether the provider respects platform terms, avoids abusive collection behavior, and documents how data is gathered and used.

Buyers increasingly want to know whether a provider can explain their approach in plain language, not just point to legal disclaimers. This includes how robots.txt directives are handled, how rate limits are respected, and how takedown requests are processed.
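
For a sense of what "respecting robots.txt and rate limits" means mechanically, here is a small sketch using Python's standard `urllib.robotparser`. It is an illustration, not a description of how any particular provider collects data: the user agent, URLs, and one-second fallback delay are hypothetical, and real crawlers also handle per-host queues, retries, and takedown lists.

```python
from urllib import robotparser


def schedule_requests(request_times, min_interval_s):
    """Shift requested send times so consecutive requests are at least min_interval_s apart."""
    scheduled = []
    for t in sorted(request_times):
        if scheduled and t - scheduled[-1] < min_interval_s:
            t = scheduled[-1] + min_interval_s
        scheduled.append(t)
    return scheduled


def plan_fetches(robots_txt, user_agent, urls, default_delay_s=1.0):
    """Keep only URLs robots.txt allows, spaced by its Crawl-delay (or a fallback)."""
    rules = robotparser.RobotFileParser()
    rules.parse(robots_txt.splitlines())
    allowed = [u for u in urls if rules.can_fetch(user_agent, u)]
    delay = rules.crawl_delay(user_agent) or default_delay_s
    # Offsets in seconds from the start of the crawl for one host.
    times = schedule_requests([0.0] * len(allowed), delay)
    return list(zip(allowed, times))


if __name__ == "__main__":
    robots = "User-agent: *\nDisallow: /internal/\nCrawl-delay: 5\n"
    urls = ["https://example.com/jobs/1", "https://example.com/internal/feed"]
    print(plan_fetches(robots, "ExampleBot", urls))
```

A provider that documents this kind of policy can answer compliance questions with specifics instead of disclaimers.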

A provider that treats compliance as a living practice rather than a legal footnote is easier to work with long-term.

Documentation reduces friction across teams

One of the underrated benefits of auditability is internal alignment.

When data provenance, validation processes, and compliance practices are documented, conversations with procurement, legal, security, and leadership become smoother. You are not improvising explanations or chasing answers across emails.

Trusted data providers understand this and make documentation part of the product experience, not something you only see during contract negotiations.

This does not mean overwhelming buyers with paperwork. It means having clear, consistent explanations ready when questions come up.

Why auditability protects you as much as the provider

At the end of the day, auditability is not about catching mistakes. It is about protecting decision-makers.

When your analysis is questioned, you want to be able to say, with confidence, where the data came from, how it was handled, and why it is reliable. Auditability gives you that footing.

That is why audit-ready job data providers are increasingly seen as lower risk, even if they are not the cheapest option. They reduce uncertainty when it matters most.

Pro Tip: If you want to test this layer during evaluation, ask the provider how they handle corrections or disputes about the data. The ability to walk through that process calmly is often the clearest sign of maturity.

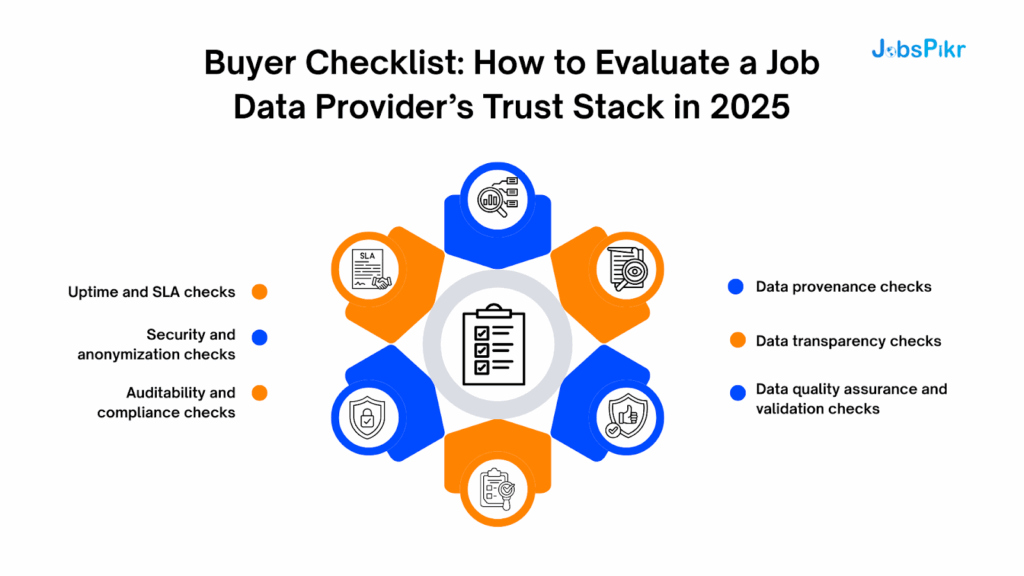

Buyer Checklist: How to Evaluate a Job Data Provider’s Trust Stack in 2025

At this point, you have the concepts. Now comes the part that helps during vendor evaluation.

This checklist is not meant to catch vendors out. It is meant to help you compare them on the things that quietly determine whether a dataset will hold up six months after you sign, not just during the demo.

You do not need perfect answers to every question. What you are looking for is clarity, consistency, and the ability to explain decisions without slipping into vague language.

Data provenance checks

Start here. Everything else builds on this.

Ask the provider to pick a real job posting from the sample dataset and walk you through it end to end. You should understand where it originated, how it was collected, and how it moved through their system. If provenance exists only at a high level and not at the record level, trust will be hard to maintain.

You should also ask whether raw records are retained alongside normalized ones. This matters when you need to verify assumptions or revisit edge cases later. Provenance is strongest when the original context is not lost.
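
Record-level provenance can be pictured as a small lineage object attached to each normalized posting. The sketch below is hypothetical: the field names, source-type labels, and step names are illustrative assumptions. It stores a SHA-256 fingerprint of the raw payload so anyone holding the retained original can verify it is the exact input the normalized record came from.

```python
import hashlib
from dataclasses import dataclass, field
from datetime import datetime


def fingerprint(raw_payload: bytes) -> str:
    """SHA-256 hex digest of the raw payload as it was fetched."""
    return hashlib.sha256(raw_payload).hexdigest()


@dataclass
class ProvenanceRecord:
    """Hypothetical per-record lineage for one normalized job posting."""
    source_url: str
    source_type: str   # illustrative labels, e.g. "employer_career_site" or "job_board"
    fetched_at: datetime
    raw_sha256: str    # ties the normalized record to the retained raw payload
    steps: list = field(default_factory=list)

    def add_step(self, name: str, version: str) -> None:
        """Record each processing step and the version of the logic that ran."""
        self.steps.append(f"{name}@{version}")


def verify_raw(prov: ProvenanceRecord, raw_payload: bytes) -> bool:
    """Check that a retained raw payload matches what the record claims it came from."""
    return fingerprint(raw_payload) == prov.raw_sha256
```

Recording the version of each step is what later lets you explain why two deliveries of the same posting differ.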

Data transparency checks

Transparency shows up in how comfortable a provider is discussing limitations.

Ask where coverage is strongest and where it is weaker. Ask how freshness varies by source type. Ask which fields are most error-prone and how those errors are handled.

A trustworthy job data provider does not need to present the dataset as perfect. They need to present it as understandable. If transparency feels selective or overly polished, you will likely discover gaps on your own later.
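
Many of these transparency questions can be checked against a sample dataset. The sketch below computes field fill rates broken down by source type, one simple way to see where coverage is strong and which fields are most often empty. It assumes each sample record is a dict with a `source_type` key; the field names are hypothetical.

```python
from collections import defaultdict


def fill_rates(records, fields):
    """Share of records with a non-empty value for each field, per source type."""
    totals = defaultdict(int)
    filled = defaultdict(lambda: defaultdict(int))
    for rec in records:
        src = rec.get("source_type", "unknown")
        totals[src] += 1
        for name in fields:
            if rec.get(name) not in (None, ""):
                filled[src][name] += 1
    return {src: {name: filled[src][name] / n for name in fields} for src, n in totals.items()}
```

Comparing these rates with what the provider says about coverage is a quick test of whether their transparency is complete or selective.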

Data quality assurance and validation checks

This is where you move from philosophy to mechanics.

Ask how duplicates are detected and collapsed, especially across syndication networks. Ask how reposts are identified and differentiated from new roles. Ask how stale postings are handled when they never get formally taken down.
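
To make those questions concrete, here is a deliberately simple sketch of duplicate collapsing and repost detection. It is an assumption-laden illustration, not how any specific provider works: it matches on an exact normalized key of employer, title, and location (real systems typically add fuzzy matching and employer normalization), and it treats a reappearance after more than 30 days of silence as a repost. The `seen_on` field and the 30-day gap are hypothetical.

```python
import re
from datetime import date


def normalize(text: str) -> str:
    """Lowercase and collapse punctuation and whitespace runs into single spaces."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def job_key(rec: dict) -> tuple:
    return (normalize(rec["employer"]), normalize(rec["title"]), normalize(rec["location"]))


def collapse_and_classify(records, repost_gap_days=30):
    """Collapse syndicated copies and label reposts.

    A sighting of a known key within repost_gap_days of the previous sighting is
    folded into the existing role; after a longer gap it is labeled a repost.
    """
    last_seen = {}
    canonical = []
    for rec in sorted(records, key=lambda r: r["seen_on"]):
        key = job_key(rec)
        prev = last_seen.get(key)
        if prev is None:
            canonical.append({**rec, "status": "new"})
        elif (rec["seen_on"] - prev).days > repost_gap_days:
            canonical.append({**rec, "status": "repost"})
        # Otherwise the record is a syndicated or duplicate copy and is not emitted.
        last_seen[key] = rec["seen_on"]
    return canonical
```

The gap threshold is exactly the kind of rule a provider should be able to state and justify, because changing it moves repost counts and therefore hiring-trend lines.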

You should also ask what happens when records fail validation. Are they removed, flagged, corrected, or left untouched? There is no single “right” answer, but there should be a clear one.
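
"Clear" can be as simple as every check having a named outcome. The sketch below illustrates one possible policy, and the policy itself is an assumption: records missing a title or employer are rejected, an inverted salary range is corrected by swapping the bounds, and a missing location is flagged for review rather than dropped. The field names are hypothetical.

```python
from enum import Enum


class Outcome(Enum):
    PASS = "pass"
    CORRECTED = "corrected"
    FLAGGED = "flagged"
    REJECTED = "rejected"


REQUIRED_FIELDS = ("title", "employer")


def validate(rec: dict):
    """Return (outcome, record, notes) under an illustrative validation policy."""
    rec = dict(rec)  # work on a copy so the input record is never mutated
    missing = [f for f in REQUIRED_FIELDS if not rec.get(f)]
    if missing:
        return Outcome.REJECTED, rec, [f"missing required field(s): {', '.join(missing)}"]

    outcome, notes = Outcome.PASS, []
    lo, hi = rec.get("salary_min"), rec.get("salary_max")
    if lo is not None and hi is not None and lo > hi:
        rec["salary_min"], rec["salary_max"] = hi, lo
        notes.append("salary_min > salary_max; swapped")
        outcome = Outcome.CORRECTED
    if not rec.get("location"):
        notes.append("location missing or unparseable")
        outcome = Outcome.FLAGGED  # a flag outranks a correction: a human should look
    return outcome, rec, notes
```

Whatever the policy, the notes list is what makes it auditable: each record carries the reason it was changed or held back.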

Quality assurance should sound like a routine, not a rescue operation.

Uptime and SLA checks

Reliability questions often get postponed until after selection. That is a mistake.

Ask what delivery timelines are guaranteed and how often they are missed. Ask how incidents are communicated. Ask whether backfills or replays are supported when issues are fixed.

You are not just buying data. You are buying consistency. If SLAs are vague or difficult to measure, you will spend a lot of time compensating for that uncertainty downstream.

Security and anonymization checks

Even though job postings are public, enterprise teams still need confidence in how data is handled.

Ask about access controls, audit logs, and delivery security. Ask how sensitive metadata and internal signals are protected. Ask what documentation is available for security and compliance reviews.

Providers that treat security seriously usually have answers ready. Providers that do not will slow your process down later.

Auditability and compliance checks

Finally, ask how the provider handles change.

If a record is corrected, can they show what changed and why? If validation logic evolves, how is that communicated? If a source is added or removed, how does that affect historical data?

Auditability is what lets you defend your analysis when someone challenges the numbers. Without it, every insight becomes fragile.

Before you shortlist any vendor, ask them to complete this checklist using their own dataset and walk you through one or two real examples. The exercise itself usually reveals more than any comparison table.

Why the Trust Stack is a Long-Term Advantage, Not Overhead

It’s tempting to see all this as extra work.

More questions. More documentation. More scrutiny. When you are under pressure to ship dashboards or deliver insights quickly, it can feel easier to accept a dataset that “looks fine” and move on.

That trade-off almost always comes back to bite.

Trust compounds, but bad data debt compounds faster

When job data is trustworthy, teams stop second-guessing it. Analysts spend less time debugging numbers and more time answering real questions. Leaders stop asking “are we sure?” and start asking “what should we do next?”

That shift is subtle, but powerful.

Strong data provenance means fewer debates about where numbers came from. Good data transparency reduces surprises. Consistent data quality assurance prevents slow erosion of confidence. Reliable SLAs keep insights aligned with reality. Auditability makes reviews and approvals smoother.

Over time, the dataset becomes infrastructure, not an experiment.

Low-trust data does the opposite. Every new use case requires caveats. Every trend needs explaining. Every meeting starts with defending the data before discussing the insight. That is data debt, and it compounds quietly.

Why trust matters more as job data moves closer to decisions

In many organizations, job data starts as “interesting.” Then it becomes “useful.” Eventually, it becomes operational.

- It informs where to hire.

- It shapes compensation bands.

- It signals which skills to invest in.

- It feeds models that recommend actions automatically.

At that stage, the question is no longer whether the data is directionally right. The question is whether you can stand behind it when the outcome matters.

That is why trusted data providers tend to last longer in stacks. They are easier to defend, easier to expand, and easier to integrate into serious workflows.

Choosing a job data provider is really choosing a risk profile

Every vendor choice carries risk. The mistake is assuming that all job data providers carry the same kind of risk.

Some risks are visible. Pricing. Coverage gaps. Feature limits. Those can be negotiated or worked around.

Trust risk is different. It shows up later, when decisions are questioned and the dataset cannot explain itself. By then, switching costs are high and credibility is already damaged internally.

In 2025, the safest choice is not the provider with the loudest claims. It is the one that is comfortable being challenged.

How JobsPikr Approaches Trust, Provenance, and Transparency

JobsPikr was built around the assumption that job data will be questioned. By data teams. By leadership. By procurement. Sometimes by regulators.

That is why the platform emphasizes clear data provenance, documented collection practices, repeatable data quality assurance, and validation routines designed specifically for job posting data. Transparency around coverage, freshness, and limitations is treated as part of delivery, not an exception.

The goal is not to present job data as perfect, but to make it explainable, auditable, and reliable enough to support real decisions.

FAQs

1. What is data provenance and why does it matter for job data?

Data provenance explains where a job posting came from, how it was collected, and what happened to it before it reached your dataset. For job data, this matters because postings are frequently duplicated, reposted, or updated across platforms. Without clear provenance, it becomes hard to tell whether trends reflect real hiring activity or data noise.

2. How can I tell if a job data provider is truly trustworthy?

A trustworthy job data provider can explain their data in plain language. They can trace individual records back to their original sources, describe how duplicates and reposts are handled, and show how validation and quality checks run continuously. If answers rely on vague claims rather than specifics, trust is usually weak.

3. What role does data validation play in job posting datasets?

Data validation acts as a guardrail. It prevents incomplete, unrealistic, or inconsistent job records from flowing into analysis. For example, validation checks help catch broken locations, missing fields, or salary ranges that do not make sense. Without strong validation, errors spread quickly into dashboards and models.

4. Why is ethical data collection important if job postings are public?

Even though job postings are publicly visible, how they are collected still matters. Ethical data collection respects platform rules, avoids abusive scraping behavior, and documents collection practices clearly. Providers that treat ethics seriously tend to produce more stable, defensible datasets over time.

5. What should I prioritize when comparing trusted data providers in 2025?

Start with data provenance, then evaluate transparency, quality assurance, validation routines, reliability, and auditability. Coverage volume alone is not enough. The best trusted data providers are those that can explain their data calmly, consistently, and under scrutiny.