- **TL;DR**

- How Does Data Anonymization Shape Trust in Labor Market Intelligence?

- Build Labor Market Intelligence You Can Trust

- What Makes Labor Market Data Sensitive Even When It Is Public?

- How Is Labor Market Data Anonymized Before It Is Shared Or Analyzed?

- What Data Anonymization Techniques Are Used For Job And Labor Data?

- Build Labor Market Intelligence You Can Trust

- How Does Encryption Protect Labor Market Data Throughout Its Lifecycle?

- How Is Labor Market Data Stored Securely At Scale?

- How Do Data Privacy Principles Influence Labor Data Architecture?

- How Do You Keep Labor Market Data Useful After Anonymization?

- Build Labor Market Intelligence You Can Trust

- How Does Transparency Build Trust In Labor Market Data Providers?

- What Should Teams Look For When Choosing A Secure Labor Market Data Provider?

- Why Trust And Intelligence Must Move Together In Labor Market Data

- Build Labor Market Intelligence You Can Trust

- FAQs

**TL;DR**

Labor market data is only valuable if teams can trust it. At JobsPikr, that trust comes from how we handle data anonymization, data security, and data privacy at every step of the pipeline. We work exclusively with public job data, but we do not treat “public” as a free pass. Job data anonymization is built into how labor data is collected, processed, encrypted, stored, and shared, so no individual or organization can be identified or reverse-engineered from the dataset.

We remove direct identifiers early, aggregate labor market data to prevent inference, and apply proven data anonymization techniques that protect privacy without stripping away analytical value. Encryption safeguards data during transfer and storage, while strict access controls and monitoring keep usage accountable. The result is labor market data that stays anonymous, secure, and still useful for workforce planning, skills analysis, and market intelligence.

This article explains, in plain language, how data anonymization works, why encryption and storage security matter, and how ethical design choices keep labor data safe while preserving the insights people analytics and data engineering teams need.

How Does Data Anonymization Shape Trust in Labor Market Intelligence?

Image Source: Lanteria

If you have ever tried to get an external dataset approved internally, you know how this goes.

A people analytics lead gets excited about labor market data. A data engineering lead asks where it comes from. Someone from security or legal joins the thread. And then the real question lands, quietly but firmly: “Are we sure this is safe?”

That is the moment data anonymization stops being a “nice to have” and becomes the entire point because labor market data is only useful if your team can use it with confidence, not with crossed fingers.

JobsPikr’s approach is simple: we treat job data anonymization, data privacy, and data security as part of the product, not a slide at the end of a deck. When anonymization is real and consistent, trust follows. When it is vague, everyone hesitates.

Labor market data ends up in decisions that people can feel

Labor market data is not a vanity dataset. Teams use it to plan hiring, benchmark skills, understand role demand, and spot shifts in compensation pressure. Those decisions affect budgets, teams, and sometimes real careers.

So the bar is higher. If a dataset even looks like it could expose individuals, recruiter identities, or sensitive patterns tied to a specific employer, it becomes hard to justify using it. Strong data anonymization removes that friction. It keeps the conversation focused on analysis, not on risk.

Scale changes the privacy equation, even for public data

Here is the part many teams miss at first: “public data” does not automatically mean “safe to aggregate forever.”

A single job post on a career page is one thing. A large, cleaned, searchable dataset of labor market data is another. When you collect at scale, you can accidentally make it easier to infer things that were never obvious in the original source. That is why job data anonymization has to account for how fields combine, how patterns appear, and how datasets get used downstream.

And this is not just a niche worry. Pew Research Center reports that concern about data use is high, including 81% of U.S. adults who say they are very or somewhat concerned about how companies use the data they collect about them. When people are already uneasy about data practices in general, trust in labor data sources has to be earned, not assumed.

Trust is what decides whether teams adopt labor market data

In evaluations, teams are not only comparing coverage or freshness. They are asking whether the dataset will survive internal scrutiny.

If anonymization practices are clear, repeatable, and built into the pipeline, adoption becomes easier. Data engineering teams can integrate the feed. Security teams can review controls. People analytics teams can share insights internally without worrying they are carrying hidden privacy debt.

That is what “useful” means here. Labor market data that is not only rich and well-structured, but also protected through data anonymization, data encryption, and secure handling practices from day one.

Build Labor Market Intelligence You Can Trust

See how JobsPikr delivers anonymized, secure labor market data that people analytics and data engineering teams can confidently use for workforce planning, skills analysis, and market benchmarking.

What Makes Labor Market Data Sensitive Even When It Is Public?

This is where a lot of confusion starts, especially for teams seeing labor market data for the first time.

On the surface, job postings feel harmless. They sit on public career pages. Anyone can read them. So, the natural reaction is to assume there is no real privacy or security risk involved. The sensitivity of labor data does not come from a single posting. It comes from what happens when thousands or millions of postings are collected, standardized, and analyzed together.

Labor market data becomes sensitive because of context, scale, and reuse, not because it contains obvious personal information.

Public Job Data Is Not The Same As Personal Data, But It Still Needs Protection

JobsPikr works with public data. That means job listings published openly by employers, staffing firms, and job boards. We do not collect resumes, candidate profiles, or application data. We do not track individual job seekers.

Even so, public data can still carry risk if it is handled carelessly. Job postings often include indirect signals such as team structure, hiring urgency, location strategy, or technology adoption. When combined across time and companies, those signals can reveal patterns that were never intended to be obvious.

This is why data anonymization still matters, even when the original source is public.

Aggregation Changes How Labor Data Behaves

A single job posting is just an announcement. A large labor market dataset is intelligence.

When labor data is aggregated, normalized, and enriched, it becomes far more powerful than its raw form. Titles are standardized. Locations are mapped. Skills are extracted. Trends emerge. That transformation is exactly what makes labor market data useful for analytics, but it is also what introduces sensitivity.

Without proper job data anonymization, aggregated datasets can make it easier to infer internal hiring strategies, organizational changes, or regional expansion plans tied to specific employers. The goal of data anonymization is to preserve insight at the market level, while preventing inference at the individual or organizational level.

Indirect Identifiers Create Risk At Scale

Most people think of privacy risk as names, emails, or phone numbers. In labor market data, the risk is more subtle.

Job title combinations, niche skill stacks, posting frequency, and specific location patterns can act as indirect identifiers when viewed together. On their own, these fields are harmless. Combined and tracked over time, they can become revealing.

This is why data anonymization techniques go beyond simply removing obvious identifiers. They focus on how fields interact, how granular the data should be, and how much detail is truly necessary for analysis.

Sensitivity Depends On How Data Is Used Downstream

Another overlooked factor is reuse. Labor data rarely lives in one place. It flows into dashboards, forecasting models, internal reports, and sometimes customer-facing insights.

Each downstream use increases exposure if the data is not properly anonymized and secured. Data privacy is not just about collection. It is about making sure that once labor data leaves the source system, it still behaves safely wherever it travels.

That is why data anonymization, data security, and data encryption have to be applied with downstream use in mind, not just ingestion.

How Is Labor Market Data Anonymized Before It Is Shared Or Analyzed?

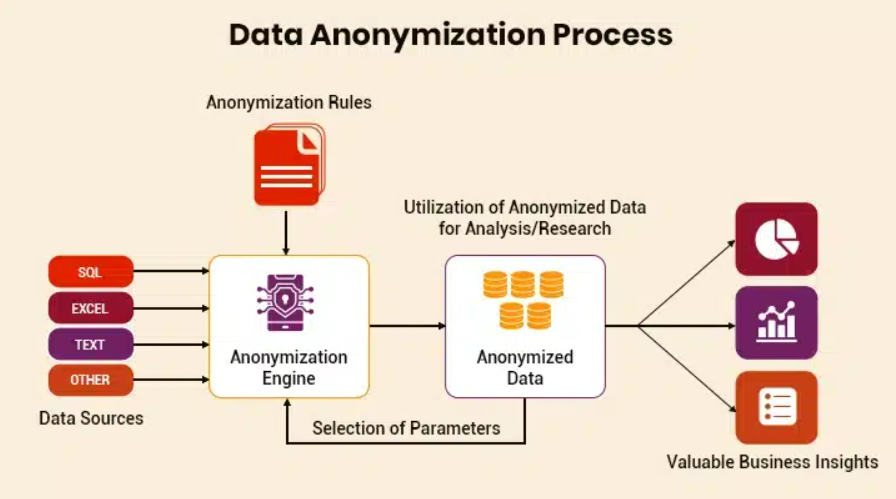

Image Source: Damcogroup

Anonymization is not something that gets sprinkled on top of data right before delivery. If that were the case, it would break the moment the data starts moving through real systems.

At JobsPikr, data anonymization is treated as a pipeline decision, not a formatting step. The goal is simple but strict: by the time labor market data is stored, queried, or shared, it should no longer be possible to trace it back to any individual person or expose sensitive signals tied to a specific entity.

That work starts earlier than most people expect.

Direct Identifiers Are Removed At The Source

The first step in job data anonymization is also the most obvious one. Anything that could directly identify an individual is excluded before it ever becomes part of the dataset.

That includes names, email addresses, phone numbers, recruiter contact details, and application-specific identifiers that sometimes appear in job listings. These fields are not masked or partially hidden. They are not ingested at all.

This matters because downstream controls are never perfect. If sensitive fields are never collected, they cannot leak, be misused, or accidentally reappear in analytics layers later on.

Indirect Identifiers Are Handled Through Aggregation

Direct identifiers are only part of the problem. In labor market data, the bigger risk often comes from indirect identifiers.

Fields like job title, skill combinations, posting frequency, and location granularity can become identifying when viewed together, especially in smaller markets or niche roles. A single posting may not raise flags, but patterns across time can.

To manage this, labor data is aggregated and normalized in ways that preserve market-level insight while reducing the risk of inference. Titles are standardized. Locations are mapped to appropriate geographic levels. Posting timelines are analyzed in ranges rather than exposed as raw event streams.

The point is not to blur the data until it is useless. The point is to make sure insights stay statistical, not traceable.

Granularity Decisions Matter More Than Most Teams Realize

Granularity is where anonymization quietly succeeds or fails.

Highly granular data feels attractive because it promises precision. But unnecessary precision is often where privacy risk creeps in. JobsPikr’s anonymization approach evaluates whether a given level of detail actually improves analysis. If it does not, it gets abstracted.

That is how labor market data stays useful without becoming fragile.

Anonymization Is Embedded Into The Data Pipeline

One of the biggest mistakes teams make is treating data anonymization as a post-processing task. That approach assumes the data will always behave as expected. In reality, data moves, gets reused, and gets joined with other sources.

By embedding anonymization directly into the ingestion and transformation layers, the data remains safe even as it flows into different systems. This design choice supports both data security and data privacy because protection does not depend on perfect usage downstream.

For people analytics and data engineering teams, this also simplifies governance. You are not relying on manual controls or assumptions. You are relying on architecture.

See What Anonymized Labor Market Data Actually Looks Like

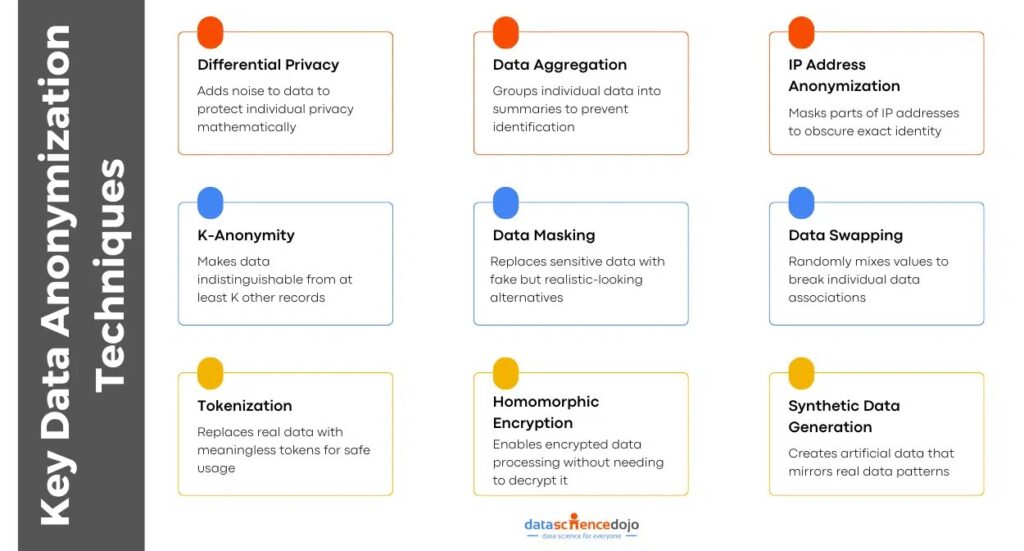

What Data Anonymization Techniques Are Used For Job And Labor Data?

Once direct identifiers are out of the picture and aggregation rules are in place, the next question usually comes from data teams: what techniques are actually doing the work behind the scenes?

There is no single switch called “data anonymization.” It is a combination of methods, each chosen for a specific type of risk. The goal is not to make labor market data vague. It is to make it safe without stripping away the signals people analytics teams rely on.

Image Source: datasciencedojo

Tokenization And Hashing Protect Sensitive Fields

Some fields still need to exist in a controlled form for the data to remain usable. Company identifiers, posting IDs, or source references are examples. These fields are useful for deduplication, tracking change over time, or quality checks, but they should never expose raw values.

That is where tokenization and hashing come in.

Instead of storing the original value, the system replaces it with a token or a hashed representation. The output cannot be reversed to reveal the original input. At the same time, the token remains consistent, which allows analysis like trend tracking or duplicate detection to work as expected.

This approach is common across secure data systems and is widely recommended by standards bodies like NIST for protecting sensitive data elements. It is a practical way to balance data security with analytical continuity.

Statistical Aggregation Keeps Insights At The Market Level

Aggregation is one of the most important data anonymization techniques for labor market data.

Rather than exposing individual postings as isolated records, insights are derived from groups. These groups might be defined by role family, geography, industry, or time window. What matters is that the output reflects patterns, not individual behavior.

For example, people analytics teams rarely need to know which specific employer posted first in a city. What they need is how demand for a role is trending across the market. Aggregation delivers that answer without introducing unnecessary risk.

Noise And Thresholding Reduce Re-Identification Risk

In some cases, even aggregated data can become sensitive if the group size is too small. This is especially true for niche roles, emerging skills, or small geographic regions.

To manage this, anonymization pipelines often apply thresholding rules. If a data slice does not meet a minimum volume, it is either withheld or rolled up into a broader category. In certain scenarios, small amounts of statistical noise may also be applied to prevent exact reconstruction of underlying counts.

These techniques are closely aligned with principles used in differential privacy research, which focuses on preventing re-identification while preserving overall trends. The intent is not to distort reality, but to prevent precision from becoming exposure.

Choosing Techniques Based On Use Case, Not Habit

One mistake teams make is applying the same anonymization technique everywhere. That usually leads to either over-protection or under-protection.

Effective job data anonymization adapts to how the data will be used. Workforce planning, skills analysis, and hiring trend monitoring all have different sensitivity profiles. The anonymization strategy should match that reality.

By combining tokenization, aggregation, and controlled thresholds, labor market data stays flexible enough for analytics while remaining aligned with data privacy and data security expectations.

Build Labor Market Intelligence You Can Trust

See how JobsPikr delivers anonymized, secure labor market data that people analytics and data engineering teams can confidently use for workforce planning, skills analysis, and market benchmarking.

How Does Encryption Protect Labor Market Data Throughout Its Lifecycle?

Encryption is one of those words that shows up in every vendor page, which is exactly why people stop trusting it.

So let me say it plainly: encryption does not magically make labor market data “safe.” It is a guardrail. A strong one, when it is done right, and when it is paired with data anonymization, access controls, and monitoring. If any one of those pieces is missing, encryption becomes a box-tick instead of real protection.

The easiest way to think about it is this. Data has two vulnerable moments: when it is moving, and when it is sitting somewhere waiting to be used. The encryption process needs to cover both.

Encryption Protects Data During Ingestion And Transfer

Labor market data travels more than most people realize.

It moves from collection systems into processing pipelines. It moves again into storage. It moves again into analytics environments, export jobs, and customer-facing delivery systems. Even if the source is public data, that movement is where risk shows up. Not because the content is secret, but because interception and tampering are possible when data is in motion.

Encryption in transit solves that. It makes the payload unreadable while it is being transferred between systems, and it reduces the chances of someone quietly copying or altering data as it flows through the pipeline.

If you have ever reviewed a data incident, you know why this matters. The breach story is rarely “someone guessed the CEO’s password and downloaded everything.” It is often something boring and operational: a misrouted transfer, a weak connection, a misconfigured endpoint. Data encryption during transfer is what prevents a simple mistake from becoming an exposure event.

Data Encryption At Rest Protects Stored Labor Data

Now for the second vulnerable moment: storage.

Once labor market data is stored, it tends to live a long time. Teams keep historical snapshots to study trends. Backups exist. Sandboxes appear. Multiple internal systems may touch the same dataset.

This is where encryption at rest earns its keep.

Data encryption at rest means that if someone gets access to the storage layer itself, the contents still do not read like text. They read like noise. The data is there, but it is not usable without the correct keys. That is a big difference, especially in cloud environments where storage and compute are constantly scaling and shifting.

Key Management Is Where “Encryption” Usually Falls Apart

This part is not glamorous, but it is the truth: the strength of encryption is often limited by how keys are handled.

If encryption keys are too widely accessible, stored in the wrong place, or never rotated, you end up with security theater. The data might be encrypted, but the doors to decrypt it are effectively left open.

Good key management means separation between data and keys, strict permissions around who and what can request decryption, and regular rotation so a single compromised key does not become a permanent problem. This is one of the first things serious data engineering teams ask about, and they are right to.

Encryption Works With Access Controls, Not Instead Of Them

One more plain point, because this is where buyers get misled.

Encryption protects data from being read by someone who should not have it. But encryption does not decide who should have it in the first place. That is access control. That is identity. That is monitoring.

If too many people, systems, or service accounts can access decrypted outputs, encryption does not save you. It just delays the problem.

That is why encryption needs to sit alongside job data anonymization and strict access rules. Anonymization reduces the sensitivity of what exists in the dataset. Encryption protects the dataset while it moves and while it sits. Access controls and monitoring define who can touch it and whether that access was appropriate.

That combination is what makes labor market data feel safe to adopt, not just safe to market.

How Is Labor Market Data Stored Securely At Scale?

Storage is where good intentions either hold up or quietly fall apart.

Most data issues do not come from dramatic hacks. They come from everyday operational reality. Multiple teams need access. Systems grow over time. Old datasets linger longer than expected. Copies appear in places no one remembers creating. If storage is not designed carefully, even well-anonymized labor market data can start to feel risky.

This is why secure storage is treated as an ongoing discipline, not a one-time setup.

Secure Storage Is About Control, Not Just Location

There is a misconception that storing data in the cloud automatically makes it secure. It does not. Cloud storage is just infrastructure. Security comes from how access is controlled, how environments are separated, and how usage is monitored over time.

For labor market data, secure storage starts with isolation. Production datasets are not mixed casually with experimentation environments. Analytics layers do not have write access to raw ingestion stores. Backups are protected with the same seriousness as live data, because a backup is still data.

This separation reduces the chance that a simple mistake in one system spreads further than it should.

Role-Based Access Keeps Data Use Intentional

Not everyone who works with labor data needs to see the same thing.

People analytics teams may need aggregated outputs. Data engineers may need pipeline-level visibility. Quality teams may need access for validation. Secure storage systems reflect these differences through role-based access.

Access is granted based on function, not convenience. Permissions are reviewed. Usage is logged. When access is no longer needed, it is removed. This may sound basic, but it is one of the most effective ways to support data privacy in practice.

It also makes audits and reviews far less painful, because the system already reflects how data is meant to be used.

Monitoring And Audit Trails Turn Storage Into A Safety Net

Storage without visibility is a blind spot.

Secure labor market data storage includes monitoring that answers simple but critical questions. Who accessed the data? When did they access it? What did they do with it? Was that behavior expected?

Audit trails create accountability without slowing teams down. They also provide early signals when something unusual happens, long before it turns into a real issue. For organizations evaluating external labor data providers, this level of transparency is often what separates “seems fine” from “we can stand behind this.”

Secure Storage Supports Long-Term Use, Not Just Delivery

Labor market data is rarely a one-off purchase. Teams use it over months or years to study trends, compare cycles, and track change. Secure storage needs to support that long-term view.

That means thinking about retention policies, historical snapshots, and controlled access to older data that still has analytical value. Data anonymization makes this safer. Secure storage makes it sustainable.

When storage is designed with both in mind, labor data stays usable without quietly accumulating risk in the background.

See What Anonymized Labor Market Data Actually Looks Like

How Do Data Privacy Principles Influence Labor Data Architecture?

This is the part most people never see, but it is where the real decisions get made.

Data privacy is not something you bolt on once the pipeline is already running. It shows up much earlier, in how systems are designed, how defaults are set, and how trade-offs are handled. Architecture choices quietly decide whether labor market data stays safe as it scales, or whether it becomes fragile over time.

At JobsPikr, data privacy principles shape the structure of the system itself, not just the policies around it.

Image Source: cyberpilot

Privacy-By-Design Shapes How Data Moves Through The System

Privacy-by-design sounds like a buzzword until you see what happens without it.

In practice, it means asking basic questions at every stage of the data flow. Do we need this field at all? Can this signal be derived at an aggregate level instead? Should this data ever leave the processing layer? Those questions influence schema design, transformation logic, and storage layout.

When privacy-by-design is taken seriously, data anonymization stops being a cleanup task. It becomes the default behavior of the pipeline. Labor market data enters the system already constrained to what is necessary for analysis, not what is technically possible to collect.

Data Minimization Reduces Risk Without Reducing Insight

One of the most effective privacy principles is also one of the simplest: collect less.

Labor market data does not need to be exhaustive to be useful. People analytics teams care about trends, distributions, and change over time. They do not need every possible attribute if those attributes do not improve decision-making.

By minimizing what is stored, the system reduces exposure naturally. Fewer sensitive combinations exist. Fewer edge cases appear. Data security becomes easier to maintain because there is simply less that can go wrong.

This is where good architecture quietly protects downstream users. The data feels clean, focused, and easier to explain internally.

Separation Of Concerns Keeps Privacy Manageable At Scale

As labor data platforms grow, different parts of the system start serving different purposes. Ingestion systems pull data. Processing layers, clean and normalize it. Analytics layers generate insight. Delivery systems expose outputs.

Privacy principles encourage keeping these concerns separate.

Raw ingestion data is not treated the same way as analytical outputs. Processing systems do not automatically inherit delivery permissions. This separation limits the spread of sensitive data and makes it much easier to reason about who can access what.

For data engineering teams, this also improves reliability. When systems have clear boundaries, mistakes are easier to contain.

Architecture Decisions Signal Maturity To Evaluators

When people analytics and engineering teams evaluate labor data providers, they often look past feature lists. They ask how the system behaves under stress, change, and scale.

Privacy-aware architecture answers those questions indirectly. It shows up in documentation, access patterns, and the absence of uncomfortable edge cases. It tells reviewers that data privacy and data security were considered from the start, not retrofitted after growth.

How Do You Keep Labor Market Data Useful After Anonymization?

This is the concern people rarely say out loud, but it is always there.

Everyone agrees that data anonymization and data privacy are important. The hesitation comes right after. If you anonymize too aggressively, do you end up with data that looks safe but tells you nothing useful? If you abstract too much, do trends disappear?

The short answer is no, not if anonymization is done with intent.

Useful labor market data does not depend on knowing who posted what. It depends on understanding patterns, movement, and change across the market. Anonymization protects identities. It does not erase signals.

Most Labor Market Insights Do Not Require Individual-Level Detail

People analytics teams are rarely trying to answer individual questions. They are asking market questions.

How is demand for a role changing over time? Which skills are becoming more common across postings? Where are hiring hotspots emerging? How quickly are employers reacting to shifts in supply?

None of these questions require personal identifiers. They require consistency, coverage, and clean aggregation. When labor data is anonymized correctly, those properties remain intact.

In fact, removing unnecessary identifiers often improves analytical clarity. The data becomes easier to model, easier to explain, and easier to defend internally.

Aggregation Preserves Signal While Reducing Exposure

Aggregation is where usefulness and privacy meet.

By grouping data at the right level, labor market data continues to reveal trends without exposing edge cases. The art is in choosing aggregation boundaries that match real-world decision-making. Geography might roll up to metro areas. Titles might roll up to role families. Time might be analyzed in weekly or monthly windows.

These choices are not arbitrary. They are based on how teams actually use labor data. When aggregation aligns with use cases, anonymization feels invisible. The insight is still there, just without unnecessary precision.

Over-Anonymization Is A Design Problem, Not A Privacy Requirement

When anonymized data becomes useless, the issue is usually design, not privacy.

Over-anonymization happens when systems strip detail without asking whether that detail is needed. It often comes from fear-driven decisions rather than thoughtful architecture. The result is data that feels “safe” but cannot answer real questions.

A better approach starts from the opposite direction. Define the decisions the data needs to support. Then anonymize everything else. This keeps labor market data both safe and sharp.

Consistency Matters More Than Raw Detail

One overlooked aspect of usefulness is consistency over time.

People analytics teams care about trends. Trends require stable definitions, repeatable transformations, and comparable outputs month after month. Data anonymization techniques that preserve consistency allow teams to trust what they are seeing, even when underlying sources change.

This is where anonymization supports labor market intelligence rather than competing with it. By stabilizing how data behaves, anonymization actually strengthens long-term analysis.

Useful Data Is Data Teams Can Defend

There is one final test of usefulness that rarely shows up in specs.

Can someone explain the data confidently in a meeting with leadership, legal, or security?

When labor market data is anonymized by design, that conversation becomes easier. Teams can talk about insights without caveats. They can share dashboards without disclaimers. They can focus on what the data shows, not on what it might expose.

That is when labor data becomes truly useful.

Build Labor Market Intelligence You Can Trust

See how JobsPikr delivers anonymized, secure labor market data that people analytics and data engineering teams can confidently use for workforce planning, skills analysis, and market benchmarking.

How Does Transparency Build Trust In Labor Market Data Providers?

Security and anonymization do a lot of heavy lifting. But on their own, they are not enough.

Trust does not come only from what a provider does with labor market data. It comes from how clearly they explain it. Transparency is what turns technical safeguards into something teams can actually believe in and stand behind internally.

When transparency is missing, even well-protected data feels risky. When it is present, trust compounds.

Clear Explanations Reduce Internal Friction

People analytics and data engineering teams rarely work in isolation. Any external labor data they bring in eventually gets reviewed by security, legal, procurement, or leadership. Those teams ask practical questions, not theoretical ones.

- Where does this data come from?

- What exactly is collected?

- What is excluded by design?

- How is data anonymization handled across the pipeline?

Clear, plain-language answers reduce friction immediately. Teams are not forced to translate vague claims into something defensible. They can point to documentation that explains how job data anonymization works, how data privacy is protected, and how data security controls are enforced.

That clarity often makes the difference between a fast approval and a stalled evaluation.

Data Lineage Makes Labor Data Easier To Trust

Another critical piece of transparency is data lineage.

Teams want to know how labor market data moves from source to insight. Which public sources are used? How often is data refreshed? What transformations are applied along the way? Where does anonymization occur?

When data lineage is documented, trust increases for a simple reason. There are fewer unknowns. Engineers can reason about the system. Analysts can explain the data. Reviewers can see that nothing questionable is happening behind the scenes.

Transparency does not mean exposing every internal detail. It means providing enough visibility for teams to understand and trust the flow of data.

Transparency Supports Responsible Downstream Use

Labor market data rarely stays in one place. It ends up in dashboards, reports, planning tools, and sometimes shared externally within an organization. Transparency helps teams understand how the data is meant to be used and where its boundaries are.

When providers clearly explain anonymization choices, aggregation levels, and intended use cases, teams are less likely to misuse the data unintentionally. That protects everyone involved, including the end users who rely on the insights.

This is an often-overlooked benefit of transparency. It acts as a guardrail, not by restricting access, but by setting expectations.

Trust Grows When Providers Are Open About Trade-Offs

No dataset is perfect. Honest providers acknowledge trade-offs instead of hiding them.

Being transparent about why certain fields are abstracted, why some granular views are unavailable, or why thresholds exist builds credibility. It signals that data anonymization and data privacy decisions were made deliberately, not accidentally.

For teams evaluating labor data in the middle of the funnel, this honesty matters. It shows maturity. It shows respect for the buyer’s intelligence. And it reinforces the idea that the provider is a long-term partner, not just a data source.

See What Anonymized Labor Market Data Actually Looks Like

What Should Teams Look For When Choosing A Secure Labor Market Data Provider?

By the time teams reach this point, the question is no longer whether labor market data is useful. It is whether the provider behind that data is trustworthy enough to rely on.

Most platforms will say the right things about data privacy and data security. The difference shows up in how specific they are, how consistent their answers feel, and how well their practices hold up under real scrutiny from engineering and security teams.

This is where evaluation needs to move beyond feature lists.

Data Anonymization Practices Should Be Explicit, Not Implied

A strong provider does not treat data anonymization as a footnote.

Teams should be able to understand, clearly, how job data anonymization is handled at each stage of the pipeline. What data is collected? What is deliberately excluded? Where does anonymization occur? Which data anonymization techniques are applied, and why?

Vague assurances are not enough here. If anonymization is core to the product, it should be easy to explain without deflection or marketing language. That clarity signals that privacy was designed in, not layered on later.

Security Architecture Should Be Reviewable By Technical Teams

Security claims matter most when engineers can reason about them.

A reliable labor market data provider should be able to explain how data encryption is applied, how access is controlled, and how storage environments are separated. This does not require exposing sensitive internals, but it does require coherence. The story should make sense to someone who builds systems for a living.

If security explanations feel hand-wavy or inconsistent, that usually points to gaps behind the scenes. Solid architecture can stand up to calm, detailed questions.

Transparency And Documentation Reduce Buyer Risk

From a buyer’s perspective, documentation is not paperwork. It is risk reduction.

Clear documentation around data sources, anonymization choices, refresh cycles, and intended use cases makes it easier to get internal approval. It also makes long-term use safer, because teams know the boundaries of the data they are working with.

Providers that invest in transparency make life easier for their customers. That alone is a strong signal of maturity.

Balance Matters More Than Absolutes

The safest dataset in the world is useless if it cannot answer real questions. The richest dataset is dangerous if it creates privacy exposure.

Strong labor market data providers find the balance. They protect privacy through data anonymization and data security while preserving the analytical depth teams actually need. They are comfortable explaining trade-offs and standing by them.

That balance is what turns labor data into a dependable input for people analytics, not a short-lived experiment.

Trust Shows Up Over Time, Not Just During Sales

Finally, trust is not something you validate once.

As teams use labor market data over months and years, trust shows up in consistency. Definitions stay stable. Access remains controlled. Security practices evolve as standards change. Questions are answered directly.

When intelligence and trust move together, labor market data becomes something teams can build on, not work around.

See What Anonymized Labor Market Data Actually Looks Like

Why Trust And Intelligence Must Move Together In Labor Market Data

Labor market data only delivers value when teams feel confident using it, sharing it, and standing behind it internally. That confidence does not come from volume or coverage alone. It comes from knowing the data was collected ethically, anonymized intentionally, secured properly, and designed to stay useful without crossing privacy lines.

Data anonymization, data security, and data privacy are not constraints on insight. When done right, they are what make insight possible at scale. They allow people analytics and data engineering teams to focus on understanding the market, not defending the data. That balance, between protection and usefulness, is what turns labor data into long-term intelligence rather than short-term signal.

Build Labor Market Intelligence You Can Trust

See how JobsPikr delivers anonymized, secure labor market data that people analytics and data engineering teams can confidently use for workforce planning, skills analysis, and market benchmarking.

FAQs

What Is Data Anonymization In Labor Market Data?

Data anonymization in labor market data refers to the process of removing or transforming information so that no individual or sensitive entity can be identified. In practice, this means excluding direct identifiers, aggregating data to market-level views, and applying techniques that prevent re-identification. The goal is to protect privacy while still allowing teams to analyze trends, demand, and workforce signals.

How Is Job Data Anonymization Different From Data Masking?

Job data anonymization is not the same as masking or redaction. Masking typically hides values while keeping the original data structure intact, which can still pose risk if the data is joined or reused. Anonymization changes how data is collected, stored, and shared so that sensitive identifiers never exist in usable form. This makes anonymized labor data safer for long-term analysis and wider internal use.

Is Anonymized Labor Market Data Still Compliant With Data Privacy Standards?

Yes, anonymized labor market data aligns well with modern data privacy expectations when done correctly. By focusing on public data, minimizing collection, and preventing re-identification, anonymization supports compliance with global privacy principles. More importantly, it reduces practical risk for teams using the data across analytics, reporting, and planning workflows.

How Does Data Encryption Support Labor Data Security?

Data encryption protects labor market data when it is moving between systems and when it is stored. Even if someone gains unauthorized access to infrastructure, encrypted data remains unreadable without proper keys. Encryption works alongside data anonymization and access controls to create layered data security, rather than relying on a single safeguard.

Can Anonymized Labor Data Still Deliver Useful Insights?

Absolutely. Most labor market insights do not depend on individual-level detail. Workforce planning, skills intelligence, hiring trends, and benchmarking all rely on aggregated patterns, not identities. When data anonymization is designed around real use cases, labor data remains highly useful while staying safe and defensible.